Java, serverless, compliance, IaC and AWS CDK is available for download.

March 04, 2026

Eclipse GlassFish: This Isn’t Your Father’s GlassFish

by Ondro Mihályi at March 04, 2026 05:04 PM

For years, developers and organizations have held certain beliefs about GlassFish, often based on their experiences with older versions. If you still think of GlassFish as a slow, unsupported, and purely for-development application server, it’s time to take a fresh look. At OmniFish, we’ve been working hard to change that perception since 2022. The Eclipse GlassFish of today, particularly from version 7.0 onwards, is a completely different platform, and we’re proud to show you what we’ve helped to build with the rest of the Eclipse GlassFish contributors.

This article explores the key differences between the modern Eclipse GlassFish and its predecessors: Oracle GlassFish and older Eclipse GlassFish versions. We’ll show you how GlassFish has evolved into a robust, enterprise-grade platform with commercial support from our team at OmniFish, frequent updates, and a strong commitment to modern Java standards and lightweight deployments. In short, this is no longer your father’s GlassFish.

From Gorillas to AWS CDK--airhacks.fm podcast

March 04, 2026 02:53 PM

Subscribe to airhacks.fm podcast via: Spotify | iTunes | RSS

March 03, 2026

Preparing for the LLM Era, AIRails.dev and Java 25 Scripting--144th airhacks.tv

March 03, 2026 04:09 PM

"Java 25 scripting is more capable than anticipated and produces shorter code than Python equivalents, LLMs know Java specs by heart due to publicly available specifications and stable APIs, grounding LLMs on JAX-RS and CDI specs eliminates hallucinations, BCE architecture works well with LLM code generation, junior developers can leverage LLMs to learn faster than ever before, Java 26 brings HTTP/3 client and structured concurrency and vector API, web components are the fastest UI technology being native to browsers, zero-dependency Java scripts replace Python and shell scripts, AI Rails skills system allows modular reuse of Java source code, java.util.logging with System.Logger facade is recommended over external logging frameworks, Java 9 JPMS was the main migration blocker from Java 8, measuring AI usage percentage as KPI is as problematic as code coverage metrics, brownfield projects benefit most from LLM assistance with good templates"

Ask questions during the show via Twitter by mentioning me: https://twitter.com/AdamBien (@AdamBien), using the hashtag #airhacks, or via the built-in chat at airhacks.tv. You can join the Q&A session live on the first Monday of each month at 8 P.M. at airhacks.tv

March 01, 2026

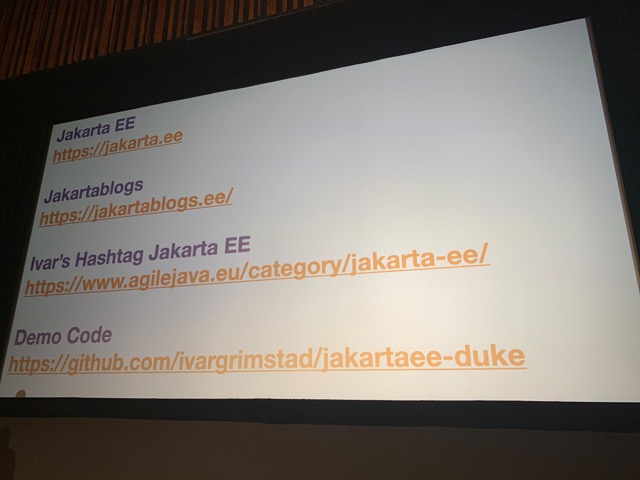

Hashtag Jakarta EE #322

by Ivar Grimstad at March 01, 2026 10:59 AM

Welcome to issue number three hundred and twenty-two of Hashtag Jakarta EE!

This week, I was in Montreal for ConFoo. The conference season is upon us, and already next week, I will be in Atlanta for Devnexus. At Devnexus, I will be managing the Jakarta EE booth in addition to speaking and attending the JUG Leader’s Summit and the Java Champions Summit. Devnexus is the premier Java conference in the US. I am very much looking forward to meeting old and new friends there.

Last week, I wrote Will AI Kill Open Source? While writing it, I decided that it is probably going to be enough material for several posts, turning it into a blog series. Stay tuned for the next post in the series.

Finally, it seems like the topic of AI is popping up on the agenda of the Jakarta EE Platform call again. When I started bringing it up about a year ago, there was a fair amount of skepticism, so I am happy to see that it was discussed in last week’s call even if I wasn’t present. Things are moving so fast these days and it is important to position Jakarta EE in all of this. Maybe a start could be to create an opinionated MCP server for Jakarta EE. Or maybe a SkillsJar for Jakarta EE? We won’t know unless we discuss it. By the way, SkillsJars is a project by James Ward where skills for agents are packaged as JARs and distributed via Maven Central. I will definitely write more about it in a later post.

February 28, 2026

ConFoo 2026

by Ivar Grimstad at February 28, 2026 04:07 PM

This was my fifth time speaking at ConFoo in Montreal. This year, there were around 800 attendees, which brings them close to the peak years before the pandemic. I am happy to see and experience the vibrant developer community in Montreal. The conference was originally a PHP conference, but now covers multiple languages and technologies. Of course, the ever present topic of AI was prevalent here as well this year. I had some very interesting discussions with Kito Mann and Andrew Lombardi among others.

Amazon offered 500 free credits for Kiro at their booth, so I downloaded it and gave it a try. Kiro offers spec-driven development, and it provided comprehensive high- and low-level designs, requirements, and associated tasks to implement them. First, I prompted it to create an MCP server for Jakarta EE. After about 200 credits, it delivered something. I am not sure if it is actually what I asked for, but it was pretty impressive.

My second try is still running. I gave it the task of providing an implementation of Jakarta Data 1.1 that will work with Apache OpenJPA and passes the TCK. It will be extremely interesting to see how this goes. I will write about it in the blog series about AI and Open Source that I started last week.

My first talk was The Past, Present, and Future of Enterprise Java. It is a good talk and usually gets positive reactions from the attendees. This was no exception. The feedback given on the paper feedback forms was very good.

The next day, I presented What Spring Developers Should Know About Jakarta EE. This is also a good talk, even if I am not as comfortable with it as the previous one. However, it was extremely well liked and got even better reviews from the attendees. I ran a little out of time at the end as the sessions at ConFoo are 45 minutes, so I am actually looking forward to giving it next week at Devnexus where I will have 60 minutes at my disposal.

On the last day, I had the pleasure of meeting Bazlur. As he pointed out in his post on X, ConFoo is where we meet up every other year when we both are speaking there. Always nice to meet our good community members in person.

February 12, 2026

GlassFish 8 Released: Enterprise-Grade Java, Redefined

by Ondro Mihályi at February 12, 2026 10:07 AM

The next generation of enterprise Java is here. The final version of Eclipse GlassFish 8 landed on 5 February 2026. It’s a big milestone and reinforces GlassFish as a top-tier, enterprise-grade platform for mission-critical systems. Backed by the commercial support and expertise of OmniFish, GlassFish 8 is not just an open-source project; it is a robust, production-ready solution designed for organizations that demand reliability, performance, and innovation.

The final version of Eclipse GlassFish 8.0.0:

- Is Jakarta EE 11 Platform compatible*

- Requires at least Java 21, tested on Java 21 and 25

- Quality assured by OmniFish using a comprehensive test suite

* GlassFish 8.0.0 passed the Jakarta EE 11 TCK, and the compatibility request will be raised as soon as the summary for all the test suites is assembled. The previous milestone release of GlassFish 8, released in December 2025, is already certified.

This release represents a significant leap forward, with support for the whole Jakarta EE 11 platform, the latest major version of Jakarta EE. GlassFish 8 builds upon the solid foundation of GlassFish 7.1 and introduces a host of new features that empower developers to build scalable, secure, and high-performance applications with ease. GlassFish continues to be at the forefront of the Jakarta EE ecosystem, with frequent updates and a strong commitment to modern Java standards.

Building on a Solid

[…]

February 11, 2026

Scaling the Open VSX Registry responsibly with rate limiting

February 11, 2026 08:05 PM

The Open VSX Registry has become widely used infrastructure for modern developer tools. That growth reflects strong trust from the ecosystem, and it brings a shared responsibility to keep the Registry reliable, predictable, and equitable for everyone who depends on it.

In a previous post, I shared an update on strengthening supply-chain security in the Open VSX Registry, including the introduction of pre-publish checks for extensions. This post focuses on the operational side of the same goal: ensuring the Registry remains resilient and sustainable as usage continues to grow.

The Open VSX Registry is free to use, but not free to operate

Operating a global extension registry requires sustained investment in:

- Compute and storage to serve and index extensions at scale

- Bandwidth to deliver downloads and metadata worldwide

- Security to protect users, publishers, and the service itself

- Staff to operate, monitor, secure, and support the Registry

These costs scale directly with usage.

AI-driven usage is scaling faster than ever

Demand on the Open VSX Registry is increasing rapidly, and AI-enabled development is accelerating that trend. A single developer can now orchestrate dozens of agents and automated workflows, generating traffic that previously would have required entire teams. In practical terms, that can mean the equivalent load of twenty or more traditional users, with direct impact on compute, bandwidth, storage, security capacity, and operational oversight.

This is not unique to the Open VSX Registry. It is an industry-wide challenge. Stewards of public package registries such as Maven Central, PyPI, crates.io, and Packagist have recently raised the same sustainability concerns in a joint statement on sustainable stewardship. Mike Milinkovich, Executive Director of the Eclipse Foundation, echoed that message in his post on aligning responsibility with usage.

As reliance on shared open infrastructure grows, sustaining it becomes a collective responsibility across the ecosystem.

Open VSX is critical, and often invisible, infrastructure

Many developers and organisations may not realise how often they rely on the Open VSX Registry. It provides the extension infrastructure behind a growing number of modern developer platforms and tools, including Amazon’s Kiro, Cursor, Google Antigravity, Windsurf, VSCodium, IBM’s Project Bob, Trae, Ona (formerly Gitpod), and others.

If you use one of these tools, you use the Open VSX Registry.

The Open VSX Registry remains a neutral, vendor-independent public service, operated in the open and governed by the Eclipse Foundation for the benefit of the entire ecosystem.

For developers, the expectation is simple: Open VSX should remain fast, stable, secure, and dependable as the ecosystem grows.

As more platforms and automated systems rely on the Registry, continuous machine-driven traffic can place sustained load on shared infrastructure. Without clear operational guardrails, that can affect performance and availability for everyone.

A practical step for sustainable and reliable operations

Usage has shifted from primarily human-driven access to continuous automation driven by CI systems, cloud-based tooling, and AI-enabled workflows. That shift requires operational controls that scale predictably.

Rate limiting provides a structured way to manage high-volume automated traffic while preserving the performance developers expect. It also ensures that operational decisions are based on real usage patterns and that expectations for large-scale consumption are clear and transparent.

Rate limits aren’t entirely new. Like most public infrastructure services, the Open VSX Registry has long had baseline protections in place to prevent sustained high-volume usage from degrading performance for everyone. What’s changing now is that we’re moving from a one-size-fits-all approach to defined tiers that more accurately reflect different usage patterns. This allows us to keep the Registry stable and responsive for developers and open source projects, while providing a clear, supported path for sustained at-scale consumption.

For individual developers and open source projects, day-to-day workflows remain unchanged. Publishing extensions, searching the registry, and installing tools will continue to work as they always have for typical usage.

A measured, transparent rollout

Rate limiting will be introduced incrementally, with an emphasis on platform health and operational stability.

The initial phase focuses on visibility and observation. This includes improved insight into traffic patterns for registered consumers, baseline protections for anonymous high-volume usage, and a monitoring period before any limits are adjusted.

This work is being done in the open so the community can follow what is changing and why. Progress and discussion are tracked publicly in the Open VSX deployment issue:

https://github.com/EclipseFdn/open-vsx.org/issues/5970

What this means for the community

The goal is to keep the Open VSX Registry reliable and fair as it scales, while minimizing impact on normal use.

For most users, nothing should feel different. Developers should see little to no impact, and publishers should not experience disruption to normal publishing workflows. Sustained, high-volume automated consumers may need to coordinate with the Registry to ensure their usage can be supported reliably over time.

Organisations that depend on the Open VSX Registry for sustained or commercial-scale usage are encouraged to get in touch. Coordinating early helps us plan capacity, maintain reliability, and support the broader ecosystem. Please contact the Open VSX Registry team at infrastructure@eclipse-foundation.org.

The intent is not restriction, but clarity in support of fairness, stability, and long-term sustainability.

Looking ahead

Automation is reshaping how developer infrastructure is consumed. Responsible rate limiting is one step toward ensuring the Open VSX Registry can continue to serve the ecosystem reliably as those patterns evolve.

We will continue to adapt based on real-world usage and community input, with the goal of keeping the Open VSX Registry a dependable shared resource for the long term.

February 05, 2026

We’re hiring: improving the services that support a global open source community

February 05, 2026 07:02 PM

The Eclipse Foundation supports a global open source community by providing trusted platforms, services, and governance. As a vendor-neutral organisation, we operate infrastructure that enables collaboration across projects, organisations, and industries.

This infrastructure supports project governance, developer tooling, and day-to-day operations across Eclipse open source projects. While much of it runs quietly in the background, it plays a critical role in the health, security, and sustainability of those projects.

We are expanding the Software Development team with two new roles. Both positions involve contributing to the design, development, and operation of services that are widely used, security-sensitive, and expected to operate reliably at scale.

Software engineer: security and detection

One of the roles is a Software Engineer position with a focus on security and detection engineering, alongside general development and operations.

This role will work on Open VSX Registry, an open source registry for VS Code extensions operated by the Eclipse Foundation. As adoption grows, maintaining the integrity and trustworthiness of the registry requires continuous analysis, detection, and operational safeguards.

In this role, you will:

- Analyse suspicious or malicious extensions and related artefacts

- Develop, test, and maintain YARA rules to detect malicious or policy-violating content

- Design, implement and contribute improvements to backend services, including new features, abuse prevention, rate-limiting, and operational safeguards

This is hands-on work that combines backend development with practical security analysis. The outcome directly improves the reliability, integrity, and operation of services that are part of the developer tooling supply chain.

For more context on this work, see my recent post on strengthening supply-chain security in Open VSX.

To apply:

https://eclipsefoundation.applytojob.com/apply/eXFgacP5SJ/Software-Engineer

Software developer: open source project tooling and services

The second role is a Software Developer position focused on improving the tools and services that support Eclipse open source projects.

This work centres on maintaining and evolving systems that our open source projects and contributors rely on every day. It includes:

- Maintaining and modernising project-facing applications such as projects.eclipse.org, built with Drupal and PHP

- Developing Python tooling to automate internal processes and improve project metrics

- Improving services written in Java or JavaScript that support project governance workflows

As with the Software Engineer role, this position involves contributing to production services. The focus is on incremental improvement, reducing technical debt, and ensuring systems remain maintainable, secure, and reliable as they evolve.

To apply:

https://eclipsefoundation.applytojob.com/apply/mvaSS7T8Ox/Software-Developer

What we are looking for

Across both roles, we are looking for people who:

- Take a pragmatic approach to problem solving

- Are comfortable working in a remote, open source environment

- Value clear documentation and thoughtful communication

- Enjoy understanding how systems work and how to improve them over time

If you are interested in working on open source infrastructure with real users and real impact, we would be happy to hear from you.

January 21, 2026

Jersey 3.1.0 behaviour changes

by Jan at January 21, 2026 03:20 PM

January 16, 2026

The Ultimate 10 Years Java Garbage Collection Guide (2016–2026) – Choosing the Right GC for Every Workload

by Alexius Dionysius Diakogiannis at January 16, 2026 04:19 PM

First published on foojay.io

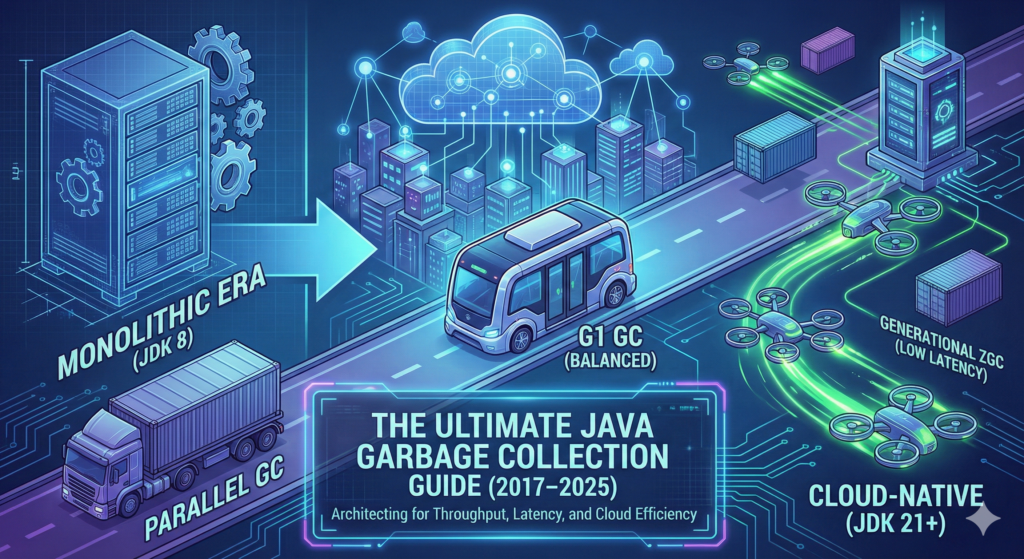

Memory management remains the primary factor for application performance in enterprise Java environments. Between 2017 and 2025, the ecosystem shifted from manual tuning to architectural selection. Industry data suggests that 60 percent of Java performance issues and 45 percent of production incidents in distributed systems stem from suboptimal Garbage Collection (GC) behavior. This guide provides a strategic framework for selecting collectors based on workload characteristics. It covers the transition from legacy collectors to Generational ZGC, analyzing trade-offs regarding throughput, latency, and hardware constraints with mathematical precision.

Introduction


The era of “write once, run anywhere” has evolved. In modern cloud-native architectures, you must “tune everywhere.” The migration from bare-metal monoliths to containerized microservices fundamentally changed how the Java Virtual Machine (JVM) interacts with memory.

A collector that performs well for a batch process often fails in a low-latency trading API. Selecting the wrong collector is no longer a minor configuration error. It is an architectural flaw. This flaw leads to cascading latency in microservices, instability in database connections, and wasted cloud resources.

This guide analyzes five primary workload categories. It synthesizes performance data from JDK 8 through JDK 25. It provides a technical decision matrix for Senior Architects and Site Reliability Engineers (SREs).

Workload Analysis and Strategic Selection

We categorize applications based on their resource patterns and business goals. Each category requires a distinct memory management strategy supported by specific mathematical tuning models.

Microservices (Spring Boot/Quarkus)

The Challenge: You must balance Resident Set Size (RSS) efficiency against startup time.

In Kubernetes environments, engineers often restrict pods to fewer than 2 processors and 2 GB of RAM. Under these conditions, JVM ergonomics default to the Serial GC. This happens silently unless you select a collector explicitly, so a service you assume is running G1 may not be.
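Because the ergonomic choice is easy to miss, it can help to ask the running JVM which collector it actually registered. The following is a minimal sketch using the standard `java.lang.management` API; the class name `ActiveCollector` is only illustrative. On HotSpot, Serial typically reports as "Copy" and "MarkSweepCompact", while G1 reports names starting with "G1".

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.List;
import java.util.stream.Collectors;

class ActiveCollector {

    // Names of the collectors registered by the running JVM.
    static List<String> collectorNames() {
        return ManagementFactory.getGarbageCollectorMXBeans().stream()
                .map(GarbageCollectorMXBean::getName)
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        Runtime rt = Runtime.getRuntime();
        // These are the values the JVM sees inside the container.
        System.out.println("CPUs visible to the JVM: " + rt.availableProcessors());
        System.out.println("Max heap (MB): " + rt.maxMemory() / (1024 * 1024));
        System.out.println("Collectors: " + collectorNames());
    }
}
```

Running this inside the same container limits as production shows whether the pod is really on the collector you expect.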

The Strategy:

For most microservices, G1 GC is the balanced choice. However, deployment density matters. Research shows that G1 is the most memory-efficient collector for dense environments. In JDK 20 and later, engineers removed one of the marking bitmaps from G1. This reduced its native memory footprint, making it highly suitable for small containers.

Warning on ZGC in Microservices:

Do not blindly apply ZGC to small containers. ZGC requires significant headroom. It typically needs 25 to 35 percent free memory to function without stalling. In an 8 GB container, ZGC images are significantly larger than G1 images. Tests show that ZGC struggles to manage trees of 10 services in constrained RAM. It often fails with Out-Of-Memory (OOM) errors where G1 remains stable.

Legacy JEE (WebLogic/JBoss/Payara)

The Challenge: These systems handle large session states and accumulate legacy memory leaks.

Older applications relied on Concurrent Mark Sweep (CMS). CMS was designed for shorter pauses but required shared processor resources. It was deprecated in JDK 9 and removed in JDK 14.

The Strategy:

G1 GC is the primary successor for these workloads. It handles large heaps up to 128 GB by using region-based incremental collection.

Operators must monitor heap usage after full cycles. If heap usage consistently stays above 85 percent, the application likely has a memory leak. This indicates a code issue rather than a tuning issue.
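The 85 percent rule can be expressed as a simple check over post-collection samples. This is a sketch under stated assumptions: the class `PostGcLeakCheck` and its methods are hypothetical, and requiring every sample in the window to exceed the threshold is one possible reading of "consistently". In a live JVM, the samples could come from `MemoryPoolMXBean.getCollectionUsage()` on the old-generation pool.

```java
import java.util.List;

class PostGcLeakCheck {

    // Threshold from the text: 85 percent heap usage after a full cycle.
    static final double THRESHOLD = 0.85;

    // Occupancy as a fraction of the maximum heap.
    static double occupancy(long usedBytes, long maxBytes) {
        return (double) usedBytes / maxBytes;
    }

    // True when every post-collection sample in the window is above the
    // threshold. An empty window never signals a leak.
    static boolean likelyLeak(List<Double> occupancyAfterFullGc) {
        if (occupancyAfterFullGc.isEmpty()) {
            return false;
        }
        return occupancyAfterFullGc.stream().allMatch(o -> o > THRESHOLD);
    }

    public static void main(String[] args) {
        // Hypothetical samples taken after three consecutive full cycles.
        List<Double> samples = List.of(0.88, 0.90, 0.93);
        System.out.println("Likely leak: " + likelyLeak(samples));
    }
}
```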

Stateful UI (Vaadin/JSF)

The Challenge: These frameworks generate numerous medium-lived objects.

User sessions reside in the heap for minutes or hours. This behavior contradicts the “weak generational hypothesis” that most objects die young. Standard configurations often promote these session objects to the Old Generation too quickly. This leads to expensive full-heap collections.

The Strategy:

Tuning the SurvivorRatio is critical here. A standard ratio is 8 to 1. Changing this to 6 to 1 allows objects to stay in the Young Generation longer. Empirical testing shows this reduces premature promotions by 25 to 30 percent. Generational ZGC is also an optimal choice here. It manages mixed collections across generations effectively.
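To see what the change from 8:1 to 6:1 means in absolute terms, recall that `-XX:SurvivorRatio=N` sets eden to N times the size of one survivor space, and the young generation holds eden plus two survivor spaces. The sketch below works through that arithmetic; the 1024 MB young generation is a hypothetical value, and adaptive sizing in a real JVM may adjust the actual numbers.

```java
class SurvivorSizing {

    // Young generation = eden + 2 survivor spaces, with eden = N * survivor,
    // so one survivor space is young / (N + 2).
    static long survivorMb(long youngGenMb, int survivorRatio) {
        return youngGenMb / (survivorRatio + 2);
    }

    // Eden is whatever remains after the two survivor spaces.
    static long edenMb(long youngGenMb, int survivorRatio) {
        return youngGenMb - 2 * survivorMb(youngGenMb, survivorRatio);
    }

    public static void main(String[] args) {
        long youngGenMb = 1024; // hypothetical young generation size
        for (int ratio : new int[] {8, 6}) {
            System.out.printf("SurvivorRatio=%d -> eden %d MB, each survivor %d MB%n",
                    ratio, edenMb(youngGenMb, ratio), survivorMb(youngGenMb, ratio));
        }
    }
}
```

With these inputs, each survivor space grows from 102 MB to 128 MB, which is the extra room that lets session objects age in the Young Generation instead of being promoted.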

Data Intensive (Spark/Flink/Batch)

The Challenge: The priority is raw throughput.

Batch workloads must complete processing windows quickly. Individual pause times do not matter. A 5-second pause is acceptable if the job finishes 10 minutes earlier.

The Strategy:

Parallel GC (the “throughput collector”) remains the champion. It utilizes all available cores for collection, achieving 95 to 98 percent processing efficiency. However, it requires careful thread configuration on large multi-core servers to prevent OS context-switching overhead.
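One way to read the efficiency figure is as the share of wall-clock time not spent in collection. The sketch below estimates it from the standard management beans; the class `GcThroughput` is illustrative, and the interpretation of "processing efficiency" as 1 minus GC time over uptime is an assumption. For stop-the-world collectors such as Parallel GC, the reported collection time approximates pause time; for concurrent collectors it can include concurrent work.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

class GcThroughput {

    // Fraction of elapsed time not spent in garbage collection.
    static double throughput(long gcMillis, long uptimeMillis) {
        if (uptimeMillis <= 0) {
            return 1.0;
        }
        return 1.0 - (double) gcMillis / uptimeMillis;
    }

    // Accumulated collection time across all registered collectors.
    static long totalGcMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime(); // -1 when undefined
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        long uptime = ManagementFactory.getRuntimeMXBean().getUptime();
        System.out.printf("GC throughput so far: %.2f%%%n",
                100.0 * throughput(totalGcMillis(), uptime));
    }
}
```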

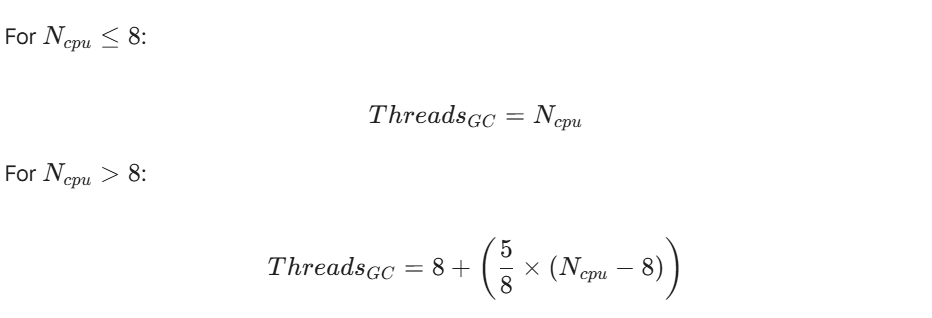

Mathematical Tuning Model: GC Threads

To optimize Parallel GC, explicitly set the thread count (ThreadsGC) based on your available CPU cores (Ncpu).

This formula ensures the GC utilizes resources efficiently without overwhelming the operating system scheduler on massive batch servers.
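A widely used heuristic for this, and the one HotSpot applies by default when `-XX:ParallelGCThreads` is not set, is to use one GC thread per core up to 8 cores and 5/8 of a thread for each additional core. Whether this matches the exact formula the author has in mind is an assumption; the sketch below simply encodes that heuristic, and the class name is illustrative.

```java
class ParallelGcThreads {

    // HotSpot-style default: N threads for N <= 8 cores,
    // otherwise 8 + (N - 8) * 5 / 8 using integer arithmetic.
    static int suggestedThreads(int ncpu) {
        if (ncpu <= 8) {
            return Math.max(1, ncpu);
        }
        return 8 + (ncpu - 8) * 5 / 8;
    }

    public static void main(String[] args) {
        int ncpu = Runtime.getRuntime().availableProcessors();
        System.out.println("-XX:ParallelGCThreads=" + suggestedThreads(ncpu));
    }
}
```

On a 64-core batch server this yields 43 threads instead of 64, leaving cores free for the scheduler and other processes.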

Ultra-Low Latency

The Challenge: High-frequency APIs require sub-millisecond pauses.

Trading systems cannot tolerate the unpredictable pauses of G1 or Parallel GC. However, low-latency collectors like ZGC race against the application’s object creation. If the application creates objects faster than the collector can clean them, you hit an “Allocation Stall.”

The Strategy:

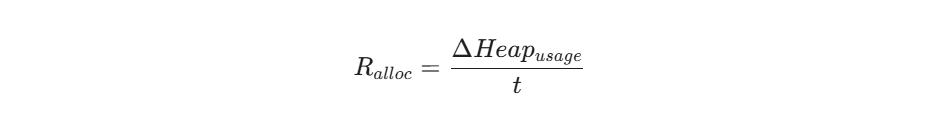

ZGC maintains pause times under 1 millisecond for heaps ranging from 8 GB to 16 TB using colored pointers and load barriers. To ensure stability, you must monitor the Allocation Rate.

Mathematical Tuning Model: Allocation Rate

You must calculate the Allocation Rate (Ralloc) over a time period (t) to determine if your heap headroom is sufficient.

If Ralloc consistently approaches the concurrent collection speed of ZGC, you must either increase the heap size or optimize the code. For modern stacks on JDK 21 or later, Generational ZGC is the superior choice as it handles high allocation rates by frequently clearing the Young Generation, preventing stalls.
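As a rough illustration, the simplest definition of the allocation rate is bytes allocated divided by elapsed time, and dividing the free headroom by that rate estimates how long the collector has before the heap fills. This definition and the helper names below are assumptions for the sketch, not necessarily the author's exact model; the input numbers are hypothetical and would normally be taken from GC logs or JFR.

```java
class AllocationRate {

    // Allocation rate in bytes per second over the observation window.
    static double bytesPerSecond(long allocatedBytes, double seconds) {
        if (seconds <= 0) {
            throw new IllegalArgumentException("seconds must be positive");
        }
        return allocatedBytes / seconds;
    }

    // Time until the free headroom is consumed if nothing is reclaimed.
    static double secondsUntilExhausted(long headroomBytes, double bytesPerSecond) {
        if (bytesPerSecond <= 0) {
            return Double.POSITIVE_INFINITY;
        }
        return headroomBytes / bytesPerSecond;
    }

    public static void main(String[] args) {
        long allocated = 6L << 30; // hypothetical: 6 GiB allocated ...
        double window = 10.0;      // ... over a 10 second window
        long headroom = 3L << 30;  // hypothetical: 3 GiB of free heap

        double rate = bytesPerSecond(allocated, window);
        System.out.printf("Allocation rate: %.1f MiB/s%n", rate / (1 << 20));
        System.out.printf("Headroom lasts about %.1f s without collection%n",
                secondsUntilExhausted(headroom, rate));
    }
}
```

If a concurrent cycle takes longer than the estimated time to exhaustion, an allocation stall becomes likely.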

Technical Performance Deep Dives

This section explores the specific trade-offs involved in migration and architecture design.

Migration Trade-offs: ParallelOld to ZGC

Migrating from ParallelOld to ZGC is a trade-off between raw speed and predictability.

You trade approximately 7 to 15 percent of raw throughput for a 1000x improvement in pause predictability.

ZGC imposes a “tax” on the system.

- CPU Overhead: ZGC adds an 8 to 20 percent CPU overhead. This comes from the concurrent threads that run alongside your application.

- Cache Efficiency: The use of colored pointers and read barriers impacts the processor cache. L3 cache hit rates often decline by 10 to 15 percent due to pointer metadata operations.

- NUMA Penalty: In Non-Uniform Memory Access (NUMA) architectures, ZGC relocation threads can suffer a 20 to 30 percent performance penalty. You must pin these threads to local memory domains to avoid this.

Microservices and Cumulative Latency

In a microservice architecture, latency accumulates. A single user request often triggers a chain of calls across 5 to 10 services. This creates a “fan-out” effect.

If each service uses a collector like G1 or Parallel, the pauses add up. Cumulative GC pauses across a chain can amplify total latency by 3 to 5 times compared to a monolith.

Using ZGC or Shenandoah dramatically mitigates this. Tests indicate that migrating to low-latency collectors reduces this cascading latency effect by 65 percent. However, this introduces resource contention. The collector competes with application threads for CPU cycles and memory bandwidth.

Database Connectivity Stability

Database connections are heavy, long-lived objects. They test the stability of a collector.

Empirical testing indicates that CMS was historically the most stable collector for database-intensive microservices. It handled the highest number of managed instances before crashing.

Early versions of ZGC (Non-Generational) struggled here. In small container tests with DB connections, non-generational ZGC frequently threw NullPointerException errors. It failed to maintain connectivity due to allocation stalls.

Generational ZGC (JDK 21+) resolves these issues. It frequently collects the young generation where session-related objects reside. This protects the long-lived database connections.

Benchmark: In Apache Cassandra tests, non-generational ZGC failed at 75 concurrent clients. Generational ZGC maintained stability with up to 275 concurrent clients.

Technical Matrix and Decision Logic

Use this data to guide your architectural decisions.

Collector Comparison (JDK 8–25)

| Collector | Supported JDK | Ideal Heap Size | Pause Time Target | CPU Overhead | Key Technology |
| --- | --- | --- | --- | --- | --- |
| Serial | 8–25 | < 100 MB | N/A (Long STW) | Lowest | Single-threaded STW |
| Parallel | 8–25 | Any | Acceptable STW | Low | Multi-threaded STW |
| G1 | 8–25 (default since 9) | 6 GB – 128 GB | < 200 ms | Medium | Region-based evacuation |
| ZGC | 11–25 | 8 GB – 16 TB | < 1 ms | High (8–20%) | Colored pointers |
| Shenandoah | 12–25 | 2 GB – 10 TB | < 10 ms | High | Concurrent compaction |

The Decision Tree

Follow this logic to select the correct collector.

Scenario A: The Resource Constraint

Is the environment a tiny container (< 2 cores/2GB RAM) or is the heap < 100 MB?

- Selection: Use Serial GC (-XX:+UseSerialGC).

Scenario B: The Batch Processor

Is the priority 4–8 hour batch processing windows where total throughput is the only metric?

- Selection: Use Parallel GC (-XX:+UseParallelGC).

Scenario C: The Generalist

Is the application a general web service with balanced latency and throughput needs? Is the heap-to-container ratio > 80%?

- Selection: Use G1 GC (-XX:+UseG1GC).

Scenario D: The High-Performance Specialist

Does the application require sub-1ms response times on large heaps (> 32 GB) on JDK 21+? Do you have 25% memory headroom?

- Selection: Use Generational ZGC (-XX:+UseZGC -XX:+ZGenerational).

Scenario E: The Alternative

Are you on a non-Oracle OpenJDK and require low latency without ZGC multi-mapping?

- Selection: Use Shenandoah GC (-XX:+UseShenandoahGC).
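The scenarios above can be sketched as a small selection function. This is an illustration only: the class, the `Goal` enum, and the parameter names are hypothetical, the thresholds are the ones quoted in the scenarios, and the evaluation order (A first, G1 as the fallback) is an assumption. One detail goes beyond the scenarios: since JDK 23 generational mode is the ZGC default, so the sketch omits -XX:+ZGenerational there.

```java
class CollectorChooser {

    enum Goal { BATCH_THROUGHPUT, BALANCED, SUB_MILLISECOND, LOW_LATENCY_WITHOUT_ZGC }

    // Returns the JVM flags suggested by Scenarios A to E.
    static String chooseFlags(int cores, double ramGb, double heapGb,
                              Goal goal, int jdk, double memoryHeadroom) {
        // Scenario A: tiny container or tiny heap.
        if (cores < 2 || ramGb < 2.0 || heapGb < 0.1) {
            return "-XX:+UseSerialGC";
        }
        // Scenario B: throughput is the only metric.
        if (goal == Goal.BATCH_THROUGHPUT) {
            return "-XX:+UseParallelGC";
        }
        // Scenario D: sub-millisecond pauses on large heaps with headroom.
        if (goal == Goal.SUB_MILLISECOND && jdk >= 21
                && heapGb > 32 && memoryHeadroom >= 0.25) {
            // Assumption beyond the text: JDK 23+ runs ZGC generational by default.
            return jdk >= 23 ? "-XX:+UseZGC" : "-XX:+UseZGC -XX:+ZGenerational";
        }
        // Scenario E: low latency without ZGC.
        if (goal == Goal.LOW_LATENCY_WITHOUT_ZGC) {
            return "-XX:+UseShenandoahGC";
        }
        // Scenario C: the generalist default.
        return "-XX:+UseG1GC";
    }

    public static void main(String[] args) {
        System.out.println(chooseFlags(16, 64, 48, Goal.SUB_MILLISECOND, 21, 0.25));
        System.out.println(chooseFlags(1, 1.0, 0.5, Goal.BALANCED, 21, 0.10));
    }
}
```

Encoding the rules this way makes the precedence explicit: a resource-constrained pod gets Serial GC regardless of its latency goal.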

G1 vs Generational ZGC in 2026

For years, the standard advice was “G1 is always best.” The arrival of Generational ZGC in JDK 21 through JDK 25 challenges this.

The legacy non-generational ZGC suffered from allocation stalls. It had to scan the entire heap to find garbage. This works poorly when an application creates objects faster than the collector can clean them.

Generational ZGC exploits the Weak Generational Hypothesis: most objects die young.

By splitting the heap into generations, it achieves two goals:

- Throughput: It improves throughput by 10 percent compared to its single-generation predecessor.

- Stability: It prevents the allocation stalls that plagued earlier versions in high-concurrency environments.

Architect’s Note:

In JDK 25, G1 remains the most memory-efficient option regarding RSS. For performance-critical stacks on JDK 21+, Generational ZGC should be the baseline, provided you provision the infrastructure with at least 25 percent memory headroom.

The Architect’s Roadmap: Optimization by JDK Version

As a Principal Java Architect, I recognize that being “stuck” on a specific JDK version often involves balancing legacy stability with the need for modern performance. Here is your roadmap for optimization and troubleshooting, depending on which version of the JVM you are currently tethered to.

If you are on Java 8…

- Manage the Metaspace Shift: Since the Permanent Generation was removed in JDK 8, you must monitor your native memory usage for class metadata using -XX:MaxMetaspaceSize. Avoid the “bad practice” of simply renaming old MaxPermSize flags to Metaspace without conducting a fresh analysis of your application’s class-loading needs.

- Address the CMS Maintenance Gap: If you are using the Concurrent Mark Sweep (CMS) collector on free builds, be aware that it is no longer maintained and lacks critical backported patches. If performance is degrading, transition to the Parallel GC for throughput or G1 GC for a balance of latency, though be wary that G1 in the Java 8 era utilized significantly more native memory than modern versions.

- Tune for Premature Promotion: If you see high Stop-The-World (STW) durations in the Old generation, increase your SurvivorRatio from the default 8:1 to 6:1. This provides more breathing room for medium-lived objects and can reduce premature promotions by up to 30%.

- Leverage Performance Editions: If an upgrade is impossible, consider utilizing specialized runtimes like Liberica JDK Performance Edition, which can provide a ~10% performance boost for legacy workloads.
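For the Metaspace point, a fresh analysis can start from the JVM's own numbers. The sketch below reads the "Metaspace" memory pool through the standard management API; the pool name is what HotSpot reports on JDK 8 and later, and the `nearLimit` helper with its 80 percent example is a hypothetical alerting rule, not a recommendation from the text.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;
import java.util.Objects;
import java.util.Optional;

class MetaspaceCheck {

    // Current usage of the Metaspace pool, if the JVM exposes one.
    static Optional<MemoryUsage> metaspaceUsage() {
        return ManagementFactory.getMemoryPoolMXBeans().stream()
                .filter(pool -> "Metaspace".equals(pool.getName()))
                .map(MemoryPoolMXBean::getUsage)
                .filter(Objects::nonNull)
                .findFirst();
    }

    // True when used exceeds the given fraction of max.
    // A max of -1 means no -XX:MaxMetaspaceSize limit is in effect.
    static boolean nearLimit(long usedBytes, long maxBytes, double fraction) {
        return maxBytes > 0 && usedBytes > maxBytes * fraction;
    }

    public static void main(String[] args) {
        metaspaceUsage().ifPresent(usage -> {
            System.out.println("Metaspace used (MB): " + usage.getUsed() / (1024 * 1024));
            System.out.println("Metaspace max (bytes, -1 = unlimited): " + usage.getMax());
            System.out.println("Near limit: " + nearLimit(usage.getUsed(), usage.getMax(), 0.8));
        });
    }
}
```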

If you are on Java 11…

- Re-evaluate Inherited Flags: Do not carry over your Java 8 tuning scripts blindly; flags that benefited the Parallel collector often conflict with the G1 GC heuristics now active by default. For example, manually setting the young generation size can prevent G1 from accurately meeting its MaxGCPauseMillis targets.

- Be Cautious with Experimental ZGC: While ZGC was introduced in JDK 11, it was experimental and limited to Linux. It lacks generational capabilities in this version, making it highly susceptible to allocation stalls if your application’s allocation rate is high.

- Monitor G1 Native Footprint: G1 was significantly improved in JDK 11 to reduce its native memory overhead, which was a major complaint in earlier versions. Use Native Memory Tracking (NMT) with -XX:NativeMemoryTracking=summary to ensure your container limits are not being breached by the collector’s internal data structures.

If you are on Java 17…

- Commit to ZGC for Large Heaps: Since JDK 15, ZGC has been production-ready and is the primary choice for heaps ranging from 8 GB to 16 TB where sub-millisecond latency is required. However, ensure you have 15-25% memory headroom beyond your peak working set to accommodate ZGC’s concurrent relocation work and metadata.

- Enable Huge Pages: On Linux, enable Transparent Huge Pages (THP) or explicit large pages to achieve a “free lunch” performance boost of approximately 10%.

- Transition from CMS: If you are migrating from Java 8/11 to 17, remember that CMS was removed in JDK 14. You must move to G1 or ZGC; G1 is typically the most stable choice for memory-constrained environments where the heap-to-container ratio exceeds 80%.

- Use Modern Diagnostics: Utilize JDK Flight Recorder (JFR) for profiling with less than 2% overhead to identify fine-grained allocation patterns and object creation rates.
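
The ZGC headroom guidance in the list above can be turned into simple arithmetic. The sketch below takes one reading of it, namely heap size = peak working set × (1 + headroom), with headroom between 0.15 and 0.25. The class name, method name, and the 16 GiB example working set are assumptions for illustration.

```java
// Hypothetical helper: sizes a heap from a measured peak working set plus headroom.
final class ZgcHeadroom {

    /** Heap size in MiB for the given working set and fractional headroom, rounded up. */
    static long heapMiB(long peakWorkingSetMiB, double headroom) {
        if (peakWorkingSetMiB <= 0 || headroom < 0) {
            throw new IllegalArgumentException("working set must be positive and headroom non-negative");
        }
        return (long) Math.ceil(peakWorkingSetMiB * (1.0 + headroom));
    }

    public static void main(String[] args) {
        long workingSetMiB = 16 * 1024; // assumed peak working set of 16 GiB
        System.out.println("Lower bound (15%): " + heapMiB(workingSetMiB, 0.15) + " MiB");
        System.out.println("Upper bound (25%): " + heapMiB(workingSetMiB, 0.25) + " MiB");
    }
}
```

The result is only a starting value for -Xmx; the working set itself should come from measurement, for example from a JFR recording.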

If you are on Java 21…

- Activate Generational ZGC: This is the most significant change in modern JVM performance. Use the flags -XX:+UseZGC -XX:+ZGenerational to handle high allocation rates that would have caused stalls in earlier versions. In benchmarks like Apache Cassandra, this version remains stable with up to 275 concurrent clients, whereas the non-generational version often failed at 75.

- Exploit the Weak Generational Hypothesis: Generational ZGC improves throughput by 10% compared to legacy ZGC by focusing its collection efforts on the young generation where most objects “die young”.

- Leverage G1 Efficiency: If your environment is extremely memory-constrained, the G1 collector in JDK 21 is more efficient than ever, as it now requires only one marking bitmap instead of two, significantly reducing its Resident Set Size (RSS).

- Evaluate Compact Object Headers: Compact object headers (JEP 450) reduce the memory footprint of every object on the heap, which can improve overall throughput. The feature is experimental in JDK 24 and is not part of mainline JDK 21 builds, so check your vendor’s release notes before counting on it in a Java 21 deployment.
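
A deployment script can easily lose one of the two ZGC flags mentioned above. As a minimal sketch, the following check inspects the JVM arguments for -XX:+UseZGC and -XX:+ZGenerational and reports which mode was requested under JDK 21 semantics. The class and enum names are hypothetical, and the check looks only at the reported flags, not at what the collector is actually doing.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper: classifies the ZGC mode requested on the command line (JDK 21 semantics).
final class ZgcFlagCheck {

    enum Mode { NOT_REQUESTED, NON_GENERATIONAL, GENERATIONAL }

    /** On JDK 21, ZGC is generational only when both flags are present. */
    static Mode fromArgs(List<String> jvmArgs) {
        if (!jvmArgs.contains("-XX:+UseZGC")) {
            return Mode.NOT_REQUESTED;
        }
        return jvmArgs.contains("-XX:+ZGenerational") ? Mode.GENERATIONAL : Mode.NON_GENERATIONAL;
    }

    public static void main(String[] args) {
        List<String> jvmArgs = ManagementFactory.getRuntimeMXBean().getInputArguments();
        System.out.println("ZGC mode requested via flags: " + fromArgs(jvmArgs));
    }
}
```

Running it at application startup and logging the result makes a silently missing -XX:+ZGenerational visible early.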

Analogy: Navigating Java versions is like maintaining a building’s HVAC system. Java 8 is an old boiler where you must manually watch the pressure gauges (Metaspace and PermGen). Java 11 and 17 are modern units that work well but require you to clear out the old filters (inherited flags) to be effective. Java 21 is a smart climate control system: by enabling Generational ZGC, the system finally becomes intelligent enough to focus its energy only on the rooms currently in use (the young generation), saving you massive amounts of manual labor and resource cost.

Conclusion

There is no single “best” collector. There is only the right collector for your specific constraints.

Parallel GC is a massive double-decker bus. It carries the most passengers (throughput) but blocks all traffic when it stops. G1 is a fleet of mid-sized shuttles. They cause frequent but short delays. Generational ZGC is a network of drones. They deliver instantly, but they consume more energy and space to operate.

Align your JVM configuration with your business goals. Monitor your allocation rates using the equations provided. And most importantly, stop treating memory management as an afterthought.

References

- Gullapalli, V. (2025). Adaptive JVM Optimization: Charting the Path from ParallelOld to ZGC Excellence. Al-Kindi Publisher. Journal of Computer Science and Technology Studies, 7(8).

- Edelveis, C. (2024). An Overview of Java Garbage Collectors. BellSoft Corporation.

- Cai, Z., Blackburn, S. M., Bond, M. D., & Maas, M. (2022). Distilling the Real Cost of Production Garbage Collectors. arXiv:2204.06782.

- Johansson, S. (2023). Garbage Collection in Java: Choosing the Correct Collector. Oracle Corporation (Java YouTube Channel).

- Oracle Corporation. (2023-2025). Java Platform, Standard Edition HotSpot Virtual Machine Garbage Collection Tuning Guide, Release 21. Oracle Help Center.

- Reddit Community. (2021-2025). How to choose the best Java garbage collector. r/java.

- Korando, B. (2023). Introducing Generational ZGC. Inside Java.

- Johansson, S. (2023). JDK 21: The GCs keep getting better. OpenJDK Performance Blog.

- Ericson, A. (2021). Mitigating garbage collection in Java microservices. Mid Sweden University. DiVA portal.

- Canales, F., Hecht, G., & Bergel, A. (2021). Optimization of Java Virtual Machine Flags using Feature Model and Genetic Algorithm. ACM ICPE ’21 Companion.

- Diakogiannis, A. D. (2024). The Generational Z Garbage Collector (ZGC). JEE.gr.

- Diakogiannis, A. D. (2017). Ta Java VM Options pou prepei na ksereis ti kanoun!. JEE.gr.

by Alexius Dionysius Diakogiannis at January 16, 2026 04:19 PM

December 18, 2025

Jakarta EE 2025: a year of growth, innovation, and global engagement

by Tatjana Obradovic at December 18, 2025 02:07 PM

As 2025 comes to a close, it's a great moment to reflect on what we’ve achieved together as the Jakarta EE community. From major platform updates to refreshing the website and growing developer engagement, this year has been full of meaningful progress.

Celebrating Jakarta EE 11

One of our biggest milestones this year was Jakarta EE 11. This time we did the release in a different way: we released each profile and the platform as soon as it was ready! The Core Profile was available in December 2024, the Web Profile followed in March 2025, and the Jakarta EE Platform was finalised in June 2025, reflecting the steady progress of the Jakarta EE community. Compatible products followed right away!

Jakarta EE 11 introduces the new Jakarta Data specification, delivers a modernised testing experience with updated TCK infrastructure based on JUnit 5 and Maven, and expands support for Java 21, including virtual threads. It also streamlines the platform by retiring older specifications such as Managed Beans, reinforces Contexts and Dependency Injection (CDI) as the preferred programming model, and continues to provide Java Records support.

This release marks a significant step forward in simplifying enterprise Java development, improving developer productivity, and supporting modern, cloud native applications. It's a true reflection of the community’s collaborative efforts and ongoing commitment to innovation.

Read the Jakarta EE 11 announcement

Introducing Jakarta Agentic AI: A New Standard for Running AI Agents on Jakarta EE

This year marked the introduction of the Jakarta Agentic AI specification project. Aimed at standardising how AI agents run within Jakarta EE runtimes, this new specification will be included in a future release. Much like Jakarta Servlet unified HTTP processing and Jakarta Batch defined batch workflows, Jakarta Agentic AI will provide a clear, annotation-driven API that defines how agents are created, managed, and executed.

Built on CDI as the core component model, the specification will establish consistent lifecycle patterns and usage semantics, making it easier for developers to implement and integrate a wide range of agent types. The project also anticipates deep integration with key Jakarta EE APIs, ensuring seamless interoperability across the platform.

Jakarta Agentic AI is being developed with broad industry collaboration in mind. The project is seeking input from subject-matter experts, vendors, and API consumers both inside and outside the Java ecosystem to build the most open, portable, and future-ready agent execution model possible. Visit the project page to learn more about the specification.

Listening and learning through the Jakarta EE Developer Survey

Our annual Jakarta EE Developer Survey remains one of the best ways to track how developers and organisations are using enterprise Java and shaping their cloud strategies. In 2025, we saw a 20% increase in participation, with over 1,700 participants sharing how they use Jakarta EE in practice.

The results show continued growth and confidence in Jakarta EE across the ecosystem. Notably, even before the full platform release was finalised, 18 percent of respondents were already using Jakarta EE 11, a strong signal of interest and early adoption.

These insights help us better understand where the community is focusing its energy, from modernising applications and adopting newer Java versions to evaluating cloud strategies and driving specification innovation. We're grateful to everyone who participated and shared their views.

Explore the 2025 developer survey findings

Learning and contributing: A growing developer ecosystem

The Jakarta EE Learn page expanded its resources to better support developers at all levels. As part of our broader effort to support community growth, we also introduced a new Contribute page, a dedicated space that outlines how individuals and organisations can get involved with Jakarta EE.

The Contribute page highlights the many ways to participate, from writing code and improving documentation to joining specification discussions or helping with community outreach. It also explains why contributing matters, what contributors gain, and how to get started.

To further support newcomers, we launched the Jakarta EE Mentorship Program, which pairs new contributors with experienced community mentors who can provide guidance, answer questions, and help them navigate the contribution process. Whether you're new to open source or simply new to Jakarta EE, the mentorship experience helps build skills, confidence, and deeper community connections.

Looking ahead: A refreshed web presence

Throughout 2025, our marketing team in collaboration with the Jakarta EE Marketing Committee worked on a major Jakarta EE website refresh to better reflect the clarity, maturity, and momentum of the community. While the full launch is now scheduled for early January, the homepage and navigation redesign is already complete and ready for rollout. The updated site features a bold new homepage, improved navigation through streamlined mega menus, and a new “Why Jakarta EE” section that helps visitors quickly understand the platform’s value.

This is just the beginning. Additional updates and structural improvements will continue rolling out through 2026, with a focus on enhancing messaging, navigation, and the overall user experience. Stay tuned for the official launch and more updates in the months ahead.

Global presence: virtual events, conferences, and community connections

Jakarta EE had a visible and impactful presence at face-to-face (F2F) conferences around the world, especially in the first half of the year. From Devnexus to JCON and beyond, Jakarta EE working group and community members presented talks, engaged with attendees at our sponsored booths, and built valuable relationships.

In 2025, JakartaOne Livestreams continued to grow with successful regional events in China and the annual JakartaOne Livestream, which attracted more than 6,000 viewers globally, with over 3,200 participants. With 20+ sessions, 15+ speakers, and 14+ hours of multilingual content, the JakartaOne Livestream series continued to drive strong community engagement across regions. Chinese JakartaOne Livestream recordings, as well as the annual JakartaOne Livestream recording, are available on our YouTube channel for anyone interested.

JakartaOne F2F Meetups further expanded the program’s regional footprint, with events in China and Japan drawing 170+ registered participants and 100+ in-person attendees, supported by high community approval and strong local participation.

With 17 Jakarta EE Tech Talks delivered in 2025, the program remains a vital channel for community learning, collaboration, and inspiration. Topics ranged from microservices and containers to security and observability. Recordings of these sessions are available on our YouTube channel.

Looking forward to 2026 and beyond

As we conclude an impactful 2025, it’s clear that Jakarta EE continues to strengthen its role as the open, vendor-neutral foundation for modern enterprise Java. The progress we’ve made this year, from delivering Jakarta EE 11 and introducing new specifications like Jakarta Agentic AI, to expanding our global events and deepening community engagement, reflects the dedication, collaboration, and passion of everyone involved.

2026 promises to be another exciting year of innovation and growth.

Thank you to all members, contributors, committers, and the wider community for your continued support. Together, we’re driving the platform forward and building a vibrant, open, and innovative ecosystem.

Here’s to another year of progress, collaboration, and innovation with Jakarta EE.

December 11, 2025

Book Review: Kotlin for Java Developers

December 11, 2025 12:00 AM

General information

- Pages: 414

- Published by: Packt

- Release date: October 2025

Disclaimer: I received this book as part of a collaboration with Packt

TL;DR

Essentially, this is a book that uses a "problem-reasoning-solution" approach to present the building blocks that make Kotlin interesting and different from Java. Hence, it isn't:

- A Kotlin reference book (i.e., it does not provide deep technical documentation)

- A book for learning how to program from scratch

In my opinion, it delivers what it promises.

About the book and how I read it

I work with both Java and Kotlin professionally. However, as a technical trainer I'm always looking for educational resources that can boost students' knowledge, either as a main reference or as a complementary resource. I think this book fits the latter category.

Right from the cover, the book states its value proposition:

Confidently transition from Java to Kotlin through hands-on examples and idiomatic Kotlin practices

I believe it achieves that, although at least in the first two chapters the writing style can make the book somewhat hard to read.

A rocket that takes time to launch but can reach Mars

The book is divided into four sections:

- Getting started with Kotlin

- Object-Oriented Programming

- Functional Programming

- Coroutines, Testing and DSLs

My least favorite section was the first, especially the first two chapters. The first chapter tries to give an overview of Kotlin versus Java, but it is too superficial and perhaps even unnecessary. I imagine the goal of this chapter is to spark interest in Kotlin, but it also anticipates that everything will be covered in more detail later. Personally, I almost skipped this chapter because I knew I would see the topics in more depth later. I suppose that's a matter of taste.

Then, the second chapter sketches out Maven and Gradle without going in depth, which felt redundant since the book is targeted at Java developers. I expected more detail in this section about which plugins are used in the build process, how they interact with Maven lifecycles, and other specific topics. But the book delegates this responsibility to the IDE wizard and that's it.

From chapter three onward something magical happens. The book finally launches and its value proposition starts to materialize. Starting in chapter three the writing style changes and consistently presents concept by concept. Almost every chapter is structured like this:

- A common problem is discussed, often respectfully from a Java perspective

- The Kotlin design decision is presented and how it aims to improve the problem

- A concise, self-contained Kotlin snippet explains the programming concept

This last part is what gives the book its value. Studying a programming language — especially when you already know how to program — is a different process than learning to program for the first time. This book recognizes that and discusses Kotlin's value propositions in technical terms, presenting self-contained snippets that readers can try in their IDE or download from the book's official repository.

If I were to use a rocket analogy, imagine that the following chapters are like Apollo 11 in full ascent from Cape Canaveral.

Part I

- Null and non-nullable types

- Extension Functions and the apply function

Part II

- Object-Oriented programming basics

- Generics and variance

- Data and sealed classes

Because this is not a reference book or official documentation, up to this point the book presents each concept well without diving into corner cases — which is fine. With practice, the book can be completed in about a week and provides a solid foundation for moving to the next level, whether that's Android development or Kotlin backend programming.

From Part III there is a noticeable shift: we leave the Java-centric atmosphere and enter idiomatic Kotlin territory. Java-only developers will likely notice this change, as we move into structures that are often too abstract to have direct equivalents in Java, including:

Part III - All this in the "Kotlin way"

- Basics of functional programming

- Lambdas

- Collections and sequences

Part IV

- Coroutines

- Synchronous and asynchronous programming

Finally, once we're in orbit the book presents two topics that are useful for day-to-day development but are not strictly part of the language:

- Kotlin testing

- Domain-specific languages (DSLs)

Things that could be improved

As with any review, this is the most difficult section to write. Besides the first two chapters, I noticed a few things that could cause confusion:

- The null safety chapter omits any mention of Java's Optional

- The coroutines section briefly mentions Virtual Threads but then presents Loom as a separate effort and likens it to Quasar (a library ecosystem). In reality, Virtual Threads are part of Project Loom

- The book inconsistently presents different JDK recommendations across chapters; sometimes it suggests Corretto while other times it simply suggests OpenJDK

- Also on the JVM side, most of the time it suggests Java 17. I imagine this was related to the time of writing. I can say that all samples worked just fine on Java 25 (the latest LTS at the time of this review), so you should be fine using that or Java 21 (officially supported by the Kotlin compiler).

Most of these are not deal-breakers; this is still an enjoyable book.

Who should read this book?

- Java developers exploring the Kotlin ecosystem, those interested in Android development, or developers considering switching to Kotlin as their primary language

December 01, 2025

The Darkside of AI: Risks and Realities – Talk at Voxxed Days Luxembourg 2025

by Alexius Dionysius Diakogiannis at December 01, 2025 01:11 PM

I’m excited to share the video recording of my talk, “The Darkside of AI: Risks and Realities,” presented at Voxxed Days Luxembourg 2025!

The rapid acceleration of Artificial Intelligence is transforming every aspect of our lives, from how we work and communicate to how we make critical decisions. While the headlines are often filled with the amazing potential and groundbreaking advancements, it’s crucial to pause and critically examine the inherent risks and complexities that come with such powerful technology.

Speaker: Alexius Dionysius Diakogiannis
Event: Voxxed Days Luxembourg 2025
Room: AmigaOS

What the Talk Covers

This session went beyond the hype to explore the challenging realities that AI introduces. During the talk, we dove deep into several critical areas:

- Bias and Fairness: How inherited biases in training data can lead to discriminatory and unfair outcomes, perpetuating societal inequalities.

- The Ethics of Autonomy: The difficult questions surrounding liability and control as AI systems become more autonomous, especially in high-stakes fields like medicine and transportation.

- Security Vulnerabilities: Exploring new attack vectors, such as adversarial examples, that can subtly trick AI models, and the risk of AI-driven misinformation campaigns (deepfakes).

- Socio-economic Disruption: Analyzing the impact of mass automation on the job market and the imperative for proactive reskilling and policy-making.


November 27, 2025

AI Glossary for Java Developers

by Thorben Janssen at November 27, 2025 01:36 PM

The post AI Glossary for Java Developers appeared first on Thorben Janssen.

AI introduces many new terms, acronyms, and techniques you must understand to build a good AI-based system. That makes it hard for many Java developers to learn how to integrate AI into their applications using SpringAI, Langchain4J, or some other library. I ran into the same issue when I started learning about AI. In this...


November 26, 2025

Offset and Keyset Pagination with Spring Data JPA

by Thorben Janssen at November 26, 2025 01:20 PM

The post Offset and Keyset Pagination with Spring Data JPA appeared first on Thorben Janssen.

Pagination is a common and easy approach to ensure that huge result sets don’t slow down your application. The idea is simple. Instead of fetching the entire result set, you only fetch the subset you want to show in the UI or process in your business code. When doing that, you can choose between 2...


November 18, 2025

SpringBoot 4.0, preview of Jakarta Data 1.1 and more in 25.0.0.12-beta

November 18, 2025 12:00 AM

This beta release adds Spring Boot 4.x support, previews Jakarta Data 1.1 capabilities, and enhances the Netty-based HTTP transport. It also updates Model Context Protocol Server 1.0 and introduces the option to use your own AES-256 key for Liberty password encryption.

The Open Liberty 25.0.0.12-beta includes the following beta features (along with all GA features):

See also previous Open Liberty beta blog posts.

SpringBoot 4.0

Open Liberty currently supports running Spring Boot 1.5, 2.x, and 3.x applications. With the introduction of the new springBoot-4.0 feature, users can now deploy Spring Boot 4.x applications by enabling this feature. While Liberty consistently supports Spring Boot applications packaged as WAR files, this enhancement extends support to both JAR and WAR formats for Spring Boot 4.x applications.

The springBoot-4.0 feature provides complete support for running a Spring Boot 4.x application on Open Liberty, and it lets you thin the application when you build it into a container.

To use this feature, the user must be running Java 17 or later with EE11 features enabled. If the application uses servlets, it must be configured to use Servlet 6.1. Include the following features in your server.xml file to define the settings.

    <featureManager>
        <feature>springBoot-4.0</feature>
        <feature>servlet-6.1</feature>
    </featureManager>

The server.xml configuration for deploying a Spring Boot application follows the same approach as in earlier Liberty Spring Boot versions.

    <springBootApplication id="spring-boot-app" location="spring-boot-app-0.1.0.jar" name="spring-boot-app" />

As in earlier versions, the Spring Boot application JAR can be deployed by placing it in the /dropins/spring folder. The springBootApplication configuration in the server.xml file can be omitted when using this deployment method.

Early preview of Jakarta Data 1.1

This release previews three new features of Jakarta Data 1.1: retrieving a subset or projection of entity attributes, the @Is annotation, and Constraint subtype parameters for repository methods that apply basic constraints to repository @Find and @Delete operations.

Previously, repository methods couldn’t limit retrieval of results to subsets of entity attributes (commonly referred to as projections). Now, repository methods can return Java records that represent a subset of an entity. In addition, parameter-based @Find and @Delete methods earlier were not able to filter on conditions other than equality. Now, more advanced filtering can be done in two different ways: typing the repository method parameter with a Constraint subtype or indicating the Constraint subtype by using the @Is annotation.
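
To see what a projection plus a non-equality constraint means in plain Java terms, the following in-memory sketch filters by "price less than" and copies only two attributes into a record. It deliberately does not use the Jakarta Data API; the Product and WeightPriceInfo records and the method name are assumptions made for illustration, and it needs Java 16 or later for records.

```java
import java.util.List;
import java.util.stream.Collectors;

// Plain-Java analogy only: this is not the Jakarta Data API.
final class ProjectionSketch {

    // Hypothetical stand-in for an entity with four attributes.
    record Product(long id, String name, float price, float weight) {}

    // The "projection": a record holding a subset of the entity attributes.
    record WeightPriceInfo(float weight, float price) {}

    /** Keeps products priced below maxPrice, then copies only weight and price. */
    static List<WeightPriceInfo> weightAndPriceBelow(List<Product> products, float maxPrice) {
        return products.stream()
                .filter(p -> p.price() < maxPrice)                     // a "less than" constraint
                .map(p -> new WeightPriceInfo(p.weight(), p.price()))  // the projection step
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        List<Product> all = List.of(
                new Product(1, "keyboard", 49.0f, 0.8f),
                new Product(2, "computer", 900.0f, 7.5f));
        System.out.println(weightAndPriceBelow(all, 50.0f));
    }
}
```

A repository does the same work declaratively: the return type names the record, and the constraint is expressed on the method parameter instead of in a lambda.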

In Jakarta Data, you write simple Java objects called Entities to represent data, and you write interfaces called Repositories to define operations on data. You inject a Repository into your application and use it. The implementation of the Repository is automatically provided for you!

Start by defining an entity class that corresponds to your data. With relational databases, the entity class corresponds to a database table and the entity properties (public methods and fields of the entity class) generally correspond to the columns of the table. An entity class can be:

- annotated with jakarta.persistence.Entity and related annotations from Jakarta Persistence

- a Java class without entity annotations, in which case the primary key is inferred from an entity property named id or ending with Id and an entity property named version designates an automatically incremented version column.

Here’s a simple entity:

    @Entity
    public class Product {
        @Id
        public long id;

        public String name;

        public float price;

        public float weight;
    }

After you define the entity to represent the data, it is usually helpful to have your IDE generate a static metamodel class for it. By convention, static metamodel classes begin with the underscore character, followed by the entity name. Because this beta is being made available well before the release of Jakarta Data 1.1, we cannot expect IDEs to generate it for us yet. However, we can provide the static metamodel class that an IDE would be expected to generate for the Product entity:

    @StaticMetamodel(Product.class)
    public interface _Product {
        String ID = "id";
        String NAME = "name";
        String PRICE = "price";
        String WEIGHT = "weight";

        NumericAttribute<Product, Long> id = NumericAttribute.of(
                Product.class, ID, long.class);
        TextAttribute<Product> name = TextAttribute.of(
                Product.class, NAME);
        NumericAttribute<Product, Float> price = NumericAttribute.of(
                Product.class, PRICE, float.class);
        NumericAttribute<Product, Float> weight = NumericAttribute.of(
                Product.class, WEIGHT, float.class);
    }

The first half of the static metamodel class includes constants for each of the entity attribute names so that you don’t need to otherwise hardcode string values into your application. The second half of the static metamodel class provides a special instance for each entity attribute, from which you can build restrictions and sorting to apply to queries at run time.

The following example is a repository that defines operations that are related to the Product entity. Your repository interface can inherit from built-in interfaces such as BasicRepository and CrudRepository. These interfaces contain various general-purpose repository methods for inserting, updating, deleting, and querying for entities. In addition, you can compose your own operations using the static metamodel and annotations such as @Find or @Delete.

    @Repository(dataStore = "java:app/jdbc/my-example-data")
    public interface Products extends CrudRepository<Product, Long> {

        // Retrieving the whole entity:
        @Find
        Optional<Product> find(@By(_Product.ID) long productNum);

        // The Select annotation can identify a single entity attribute to retrieve:
        @Find
        @Select(_Product.PRICE)
        Optional<Float> getPrice(@By(_Product.ID) long productNum);

        // You can return multiple entity attributes as a Java record:
        @Find
        @Select(_Product.WEIGHT)
        @Select(_Product.NAME)
        Optional<WeightInfo> getWeightAndName(@By(_Product.ID) long productNum);

        static record WeightInfo(float itemWeight, String itemName) {}

        // The Select annotation can be omitted if the record component names match the entity attribute names:
        @Find
        List<WeightPriceInfo> getWeightAndPrice(@By(_Product.NAME) String name);

        static record WeightPriceInfo(float weight, float price) {}

        // Constraint subtypes (such as Like) for method parameters offer different types of filtering:
        @Find
        Page<Product> named(@By(_Product.NAME) Like namePattern,
                            Order<Product> sorting,
                            PageRequest pageRequest);

        // The @Is annotation also allows you to specify Constraint subtypes:
        @Find
        @OrderBy(_Product.PRICE)
        @OrderBy(_Product.NAME)
        List<Product> pricedBelow(@By(_Product.PRICE) @Is(LessThan.class) float maxPrice,
                                  @By(_Product.NAME) @Is(Like.class) String namePattern);

        @Delete
        long removeHeaviest(@By(_Product.WEIGHT) @Is(GreaterThan.class) float maxWeightAllowed);
    }

See the following example of the application that uses the repository and static metamodel.

    @DataSourceDefinition(name = "java:app/jdbc/my-example-data",
                          className = "org.postgresql.xa.PGXADataSource",
                          databaseName = "ExampleDB",
                          serverName = "localhost",
                          portNumber = 5432,
                          user = "${example.database.user}",
                          password = "${example.database.password}")
    public class MyServlet extends HttpServlet {
        @Inject
        Products products;

        protected void doGet(HttpServletRequest req, HttpServletResponse resp)
                throws ServletException, IOException {
            // Insert:
            Product prod = ...
            prod = products.insert(prod);

            // Find one entity attribute:
            float price = products.getPrice(prod.id).orElseThrow();

            // Find multiple entity attributes as a Java record
            // (the repository method returns an Optional, so unwrap it):
            Products.WeightInfo info = products.getWeightAndName(prod.id).orElseThrow();
            System.out.println(info.itemName() + " weighs " + info.itemWeight() + " kg.");

            // Filter by supplying a Like constraint, returning only the first 10 results:
            Page<Product> page1 = products.named(
                    Like.pattern("%computer%"),
                    Order.by(_Product.price.desc(),
                             _Product.name.asc(),
                             _Product.id.asc()),
                    PageRequest.ofSize(10));

            // Filter by supplying values only:
            List<Product> found = products.pricedBelow(50.0f, "%keyboard%");
            ...
        }
    }

For more information about Jakarta Data 1.1, see the following resources:

Note

This beta includes only the Data 1.1 features for entity subsets or projections, the @Is annotation, and Constraint subtypes as repository method parameters that accept basic constraint values. Other new Data 1.1 features are not included in this beta.

Model Context Protocol Server 1.0 updates

The Model Context Protocol (MCP) is an open standard for AI applications to access real-time information from external sources. The Liberty MCP Server feature mcpServer-1.0 allows developers to expose the business logic of their applications, allowing it to be integrated into agentic AI workflows.

This beta release of Liberty includes important updates to the mcpServer-1.0 feature, including session management and easier discovery of the MCP endpoint.

Prerequisites

To use the mcpServer-1.0 feature, it is required to have Java 17 installed on your system.

MCP Session Management

This beta version includes support for the session management function of the MCP specification. This feature enables stateful sessions between client and server, allowing the server to securely correlate multiple requests in the same session for features like tool call cancellation.

When a client connects to the MCP server, a unique session ID is assigned to the client during the initialization phase and returned in the Mcp-Session-Id HTTP response header. For subsequent requests, the client must include the session ID in the Mcp-Session-Id request header.
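
As a rough client-side sketch of this header exchange, the following code uses the JDK's java.net.http API to read the session ID from response headers and attach it to a follow-up request. The class and method names, the example session ID, and the tools/list JSON-RPC payload are illustrative assumptions. Nothing is sent over the network; the request is only built and inspected.

```java
import java.net.URI;
import java.net.http.HttpHeaders;
import java.net.http.HttpRequest;
import java.util.Optional;

// Hypothetical client-side helper for carrying the MCP session ID between requests.
final class McpSessionSketch {

    static final String SESSION_HEADER = "Mcp-Session-Id";

    /** Reads the session ID from the headers of the initialization response, if present. */
    static Optional<String> sessionIdFrom(HttpHeaders responseHeaders) {
        return responseHeaders.firstValue(SESSION_HEADER);
    }

    /** Builds a follow-up POST that includes the session ID in the request header. */
    static HttpRequest followUp(URI endpoint, String sessionId, String jsonRpcBody) {
        return HttpRequest.newBuilder(endpoint)
                .header("Content-Type", "application/json")
                .header("Accept", "application/json, text/event-stream")
                .header(SESSION_HEADER, sessionId)
                .POST(HttpRequest.BodyPublishers.ofString(jsonRpcBody))
                .build();
    }

    public static void main(String[] args) {
        URI endpoint = URI.create("http://localhost:9080/myMcpApp/mcp");
        // "example-session-id" stands in for the value returned by the server.
        HttpRequest request = followUp(endpoint, "example-session-id",
                "{\"jsonrpc\":\"2.0\",\"id\":2,\"method\":\"tools/list\"}");
        System.out.println(request.method() + " " + request.uri());
        System.out.println(SESSION_HEADER + ": "
                + request.headers().firstValue(SESSION_HEADER).orElse("<missing>"));
    }
}
```

In a real client, the HttpHeaders passed to sessionIdFrom would come from the HttpResponse of the initialization call.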

MCP Endpoint Discoverability

The MCP endpoint is made available at /mcp under the context root of your application, e.g., http://localhost:9080/myMcpApp/mcp. For ease of discovery, the URL of the MCP endpoint for your Liberty hosted application is now output to the server logs on application startup.

The following example shows what you would see in your logs if you have an application called myMcpApp:

    I MCP server endpoint: http://localhost:9080/myMcpApp/mcp

In this beta version, the MCP server endpoint is also accessible with a trailing slash (/) at the end, for example, http://localhost:9080/myMcpApp/mcp/.

Liberty MCP Server API Documentation

You can now find API documentation for the Liberty MCP Server feature. The javadoc is located in the io.openliberty.mcp_1.0-javadoc.zip file within your wlp/dev/api/ibm/javadoc directory.

Attach the Liberty MCP Server feature’s Javadoc to your IDE to view descriptions of the API’s methods and class details.

Note: The following packages and interfaces that are documented in the Javadoc are not yet implemented:

- io.openliberty.mcp.encoders
- io.openliberty.mcp.annotations.OutputSchemaGenerator
- io.openliberty.mcp.annotations.Tool.OutputSchema

For more information about Liberty MCP Server feature mcpServer-1.0, including how to get started with it, see the blog post.

Updates to Netty‑based HTTP transport on Open Liberty

Open Liberty introduced a Netty-based HTTP transport as a preview in 25.0.0.11-beta. This change replaced the underlying transport implementation for HTTP/1.1, HTTP/2, WebSocket, JMS, and SIP communications. This beta release adds some updates to the feature. It is designed for zero migration impact: your applications and the server.xml file continue to behave as before. We look forward to, and are counting on, your feedback before GA!

Netty’s event‑driven I/O gives us a modern foundation for long‑term scalability, easier maintenance, and future performance work, all without changing APIs or configuration!

No changes are required to effectively use the current 'All Beta Features' runtime for this release. To help us evaluate parity and performance with real-world scenarios, you can try the following things:

- HTTP/1.1 and HTTP/2: large uploads or downloads, chunked transfers, compression-enabled content, keep-alive behavior
- WebSocket: long-lived communications, backpressure scenarios
- Timeouts: read/write/keep-alive timeouts under load
- Access logging: verify formatting and log results compared to previous builds
- JMS communications: message send/receive throughput, durable subscriptions

Limitations of the Beta release:

HTTP

- HTTP requests with a content length greater than the maximum integer value fail due to internal limitations on request size with Netty.
- When the HTTP option maxKeepAliveRequests has no limit, HTTP 1.1 limits pipelined requests to 50.
- The HTTP option resetFramesWindow is reduced from millisecond to second precision due to limitations in the Netty library.
- Due to internal limitations of the Netty library, the HTTP option MessageSizeLimit is now adjusted to be capped at the maximum integer value for HTTP/2.0 connections.
- Due to internal differences with Netty, the HTTP option ThrowIOEForInboundConnections could behave differently from the Channel Framework implementation.
- Due to internal limitations of Netty, the acceptThread and waitToAccept TCP options are currently not implemented and are ignored for the current Beta if set.
- Cleartext upgrade requests for HTTP 2.0 with request data are rejected with a 'Request Entity Too Large' status code. A fix is in progress.
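For the first limitation, a test client could check the size of a payload before sending it. The sketch below assumes that "maximum integer value" means Java's Integer.MAX_VALUE (2,147,483,647 bytes, just under 2 GiB). The class and method names are hypothetical and not part of Liberty.

```java
// Hypothetical helper; the class and method names are illustrative only.
class ContentLengthGuard {

    // Assumed limit: Java's Integer.MAX_VALUE, that is 2,147,483,647 bytes.
    static final long MAX_CONTENT_LENGTH = Integer.MAX_VALUE;

    // Returns true when a request body of this size is larger than the assumed limit.
    static boolean exceedsBetaLimit(long contentLengthBytes) {
        return contentLengthBytes > MAX_CONTENT_LENGTH;
    }
}
```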

WebSocket

WebSocket inbound requests can generate FFDC RuntimeExceptions on connection cleanup when a connection is closed from the client side.

SIP

Before this release, the SIP Resolver transport did not use a Netty transport implementation. This issue is resolved in the current beta!

ALPN

Currently, our Netty implementation supports only the native JDK ALPN implementation. Additional information about the ALPN implementations that are currently supported by the Channel Framework but not by our Netty beta can be found here.

Bring Your Own AES-256 Key

Liberty now allows you to provide a Base64-encoded 256-bit AES key for password encryption.

What’s New?

Previously, Liberty supported the wlp.password.encryption.key property, which accepted a password and derived an AES key through a computationally intensive process. This derivation involved repeated hashing with a salt over many iterations, which added overhead during server startup.

You can now supply a pre-generated AES key directly. This eliminates the derivation step, resulting in faster startup times and improved runtime performance when encrypting and decrypting passwords.
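The difference between the two approaches can be illustrated with standard JDK cryptography classes. This is only a sketch of the general idea and is NOT Liberty's actual derivation: the algorithm name PBKDF2WithHmacSHA256, the salt, and the iteration count are placeholders chosen for the example, and the class and method names are hypothetical.

```java
import java.security.GeneralSecurityException;
import java.util.Base64;
import javax.crypto.SecretKeyFactory;
import javax.crypto.spec.PBEKeySpec;

// Illustration only: NOT Liberty's actual key handling.
class KeySourceSketch {

    // Password-derived key: the cost grows with the iteration count.
    // The PBKDF2 algorithm used here is an assumption for this sketch.
    static byte[] deriveFromPassword(char[] password, byte[] salt, int iterations) {
        PBEKeySpec spec = new PBEKeySpec(password, salt, iterations, 256);
        try {
            return SecretKeyFactory.getInstance("PBKDF2WithHmacSHA256")
                    .generateSecret(spec).getEncoded();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Key derivation failed", e);
        } finally {
            spec.clearPassword();
        }
    }

    // Pre-generated key: a single Base64 decode with no hashing loop.
    static byte[] loadFromBase64(String base64Key) {
        byte[] key = Base64.getDecoder().decode(base64Key);
        if (key.length != 32) {
            throw new IllegalArgumentException("Expected a 256-bit (32-byte) key");
        }
        return key;
    }
}
```

In this sketch, deriveFromPassword repeats its hashing work on every call, while loadFromBase64 does a constant amount of work regardless of how the key was originally produced.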

Why It Matters

This feature not only improves performance but also prepares for migration from traditional WebSphere. The encoded password format remains the same, and future migration tools will allow you to export keys from traditional WebSphere for use in Liberty.

How to Enable It

- Obtain a 256-bit AES key

  Generate a 256-bit AES key using your own infrastructure and encode it in Base64, or generate one by using securityUtility.

  To generate a 256-bit AES key using securityUtility, run the new securityUtility generateAESKey task to:

  - Generate a random AES key:

    ./securityUtility generateAESKey

  - Derive a key from a passphrase:

    ./securityUtility generateAESKey --key=<password>

- Configure the key in Liberty

  Add the following variable in server.xml or an included file:

    <variable name="wlp.aes.encryption.key" value="<your_aes_key>" />

- Encode your passwords

  Use securityUtility encode with one of these options:

  - Provide the key directly:

    ./securityUtility encode --encoding=aes --base64Key=<your_base64_key> <password>

  - Specify an XML or properties file containing wlp.aes.encryption.key or wlp.password.encryption.key. Note: The aesConfigFile parameter now allows users to encode with either of the AES properties:

    ./securityUtility encode --encoding=aes --aesConfigFile=<xml_or_properties_file_path> <password>

- Update configuration

  Copy the new encoded values into your server configuration.

Performance Tip: For best results, re-encode all passwords using the new key. Mixed usage of old and new formats is supported for backward compatibility, but full migration ensures optimal performance.
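For the "own infrastructure" route in the first step, a Base64-encoded 256-bit key can be produced with the JDK alone. The following is a minimal sketch, assuming that any random 32-byte AES key in standard Base64 encoding matches the format described above. The class and method names are hypothetical, and the securityUtility generateAESKey task remains the route that this post documents.

```java
import java.security.NoSuchAlgorithmException;
import java.util.Base64;
import javax.crypto.KeyGenerator;

// Hypothetical helper; not part of Liberty or securityUtility.
class AesKeySketch {

    // Generates a random 256-bit AES key and returns it Base64-encoded.
    static String newBase64Key() {
        try {
            KeyGenerator generator = KeyGenerator.getInstance("AES");
            generator.init(256); // key size in bits
            byte[] rawKey = generator.generateKey().getEncoded();
            return Base64.getEncoder().encodeToString(rawKey);
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException("AES key generation is unavailable", e);
        }
    }

    public static void main(String[] args) {
        // Prints the key once so that it can be stored in a protected location.
        System.out.println(newBase64Key());
    }
}
```

The printed value is what would go into the wlp.aes.encryption.key variable or the --base64Key option, and it should be handled with the same care as any other secret.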

Other command line tasks have been updated to accept Base64 keys and AES configuration files in a similar fashion:

- securityUtility
  - createSSLCertificate
  - createLTPAKeys
  - encode
- configUtility
  - install
- collective
  - create
  - join
  - replicate

Try it now

To try out these features, update your build tools to pull the Open Liberty All Beta Features package instead of the main release. The beta works with Java SE 25, Java SE 21, Java SE 17, Java SE 11, and Java SE 8.

If you’re using Maven, you can install the All Beta Features package using:

<plugin>
    <groupId>io.openliberty.tools</groupId>
    <artifactId>liberty-maven-plugin</artifactId>
    <version>3.11.5</version>
    <configuration>
        <runtimeArtifact>
            <groupId>io.openliberty.beta</groupId>
            <artifactId>openliberty-runtime</artifactId>
            <version>25.0.0.12-beta</version>
            <type>zip</type>
        </runtimeArtifact>
    </configuration>
</plugin>

You must also add dependencies to your pom.xml file for the beta version of the APIs that are associated with the beta features that you want to try. For example, the following block adds dependencies for two example beta APIs:

<dependency>
    <groupId>org.example.spec</groupId>
    <artifactId>exampleApi</artifactId>
    <version>7.0</version>
    <type>pom</type>
    <scope>provided</scope>
</dependency>
<dependency>
    <groupId>example.platform</groupId>
    <artifactId>example.example-api</artifactId>
    <version>11.0.0</version>
    <scope>provided</scope>
</dependency>

Or for Gradle:

buildscript {
    repositories {
        mavenCentral()
    }
    dependencies {
        classpath 'io.openliberty.tools:liberty-gradle-plugin:3.9.5'
    }
}

apply plugin: 'liberty'

dependencies {
    libertyRuntime group: 'io.openliberty.beta', name: 'openliberty-runtime', version: '[25.0.0.12-beta,)'
}

Or if you’re using container images:

FROM icr.io/appcafe/open-liberty:beta

Or take a look at our Downloads page.

If you’re using IntelliJ IDEA, Visual Studio Code or Eclipse IDE, you can also take advantage of our open source Liberty developer tools to enable effective development, testing, debugging and application management all from within your IDE.

For more information on using a beta release, refer to the Installing Open Liberty beta releases documentation.

We welcome your feedback

Let us know what you think on our mailing list. If you hit a problem, post a question on StackOverflow. If you hit a bug, please raise an issue.

November 06, 2025

End of Life: Changes to Eclipse Jetty and CometD

by Jesse McConnell at November 06, 2025 03:36 PM

Webtide (https://webtide.com) is the company behind the open-source Jetty and CometD projects. Since 2006, Webtide has fully funded the Jetty and CometD projects through services and support, including migration assistance, production support, developer assistance, and CVE resolution.

First, the change.

Starting January 1, 2026, Webtide will no longer publish releases for Jetty 9, Jetty 10, and Jetty 11, as well as CometD 5, 6, and 7 to Maven Central or other public repositories.

Take a look at the primary announcement if you’re interested.

So, the motivation.

Why we are in this situation now harks back to the beginnings of Webtide. Briefly, Greg Wilkins founded the Jetty project in 1995 as part of a contest created by Sun Microsystems for a new language called Java. For a decade, he and Jan Bartel carefully stewarded the project as part of their consulting company Mort Bay Consulting. Around the Jetty 6 timeframe, in 2006, Webtide was founded as an LLC to evolve the project further commercially. Still, at its core, the goal was to support the incredible community that had developed over the years. When I joined in 2007, we began working to join the Eclipse Foundation. We took steps to formalize our development processes, aiming to add more commercial predictability to the open-source project. Joining the Eclipse Foundation also meant adhering to their rigorous IP policy for both the Jetty codebase and its dependencies, an essential step in improving corporate uptake.

This was also the time for the project to handle the end-of-life process for Jetty 6, while establishing Jetty 7 and Jetty 8. This was the opportunity that Webtide needed to support the project’s development by offering commercial services and support for EOL Jetty 6, while focusing on supporting and funding the future of Jetty 7 and Jetty 8.

It was the crux; after careful consideration, we decided that all commercial support releases would be open-source for the benefit of all. While not a traditional business decision, it aligned with our values and dedication to the community, which was rewarded as the community continued to grow its usage of Jetty.

This worked wonderfully for almost 20 years.

Something shifted…

We started to notice a shift in the community a few years ago. For almost 20 years, the companies we spoke with valued how our support could help them become more successful, with many ultimately becoming customers who truly understood the benefits of supporting open-source. Every single one of them saw the value in releasing EOL releases freely. When I became CEO a decade ago and Webtide became 100% developer-owned and operated, we were able to continue operating in this commercial environment with ease, to such an extent that the future of Webtide and the Jetty project is assured for many years to come.

So what changed? The tone of many companies we spoke to. Increasingly, while explaining the model that served Webtide well for so many years, where I used to hear “That makes so much sense, this works great!”, I now hear “So it’s just free? Great, I need to check a box.” This is followed up with the galling question “Could you put this policy of yours in writing on your company letterhead?”

And today?

Twenty years ago, things were different; Maven 2 dominance was emerging, and Maven Central was gaining ubiquity. Managing transitive dependencies was novel in many circles. Managing CVEs in a corporate setting was in its infancy, particularly with Java developer software stacks.

Now, build tooling is diverse, Maven Central is a global central repository system, and corporations should have their own caching repository servers, or they really should! Even JavaEE was rebranded as Jakarta at the Eclipse Foundation. So much change, but the one I’ll highlight is the emergence of business units focused on corporate software policies, complete with BOM files containing ever more metadata and checkboxes to click, managing CVE risks associated with software developed internally. Developers, the primary people Webtide has interacted with over the years, are increasingly far removed from software maintenance activities.