full-stack Java AI with DeepNetts and GPU acceleration is available for download.

April 26, 2024

GIDS 2024

by Ivar Grimstad at April 26, 2024 11:34 AM

My fifth time speaking at GIDS was just as good as the previous ones. The conference has an amazing lineup of high-profile speakers, so it is a great honor to be among them. This year, the conference had 5400 attendees spread over four days with five parallel tracks.

I had a packed room for my talk, titled "From Spring Boot 2 to Spring Boot 3 with Java 21 and Jakarta EE". One of the great things about this conference, and maybe the main reason why I keep coming back to it, is that the audience is so engaged. They are not afraid to ask relevant questions during as well as after the talk. I am also very often approached while roaming the exhibition hall by attendees who want to discuss the talk or other topics of interest.

In one of the breaks, I was interviewed by Cassandra Chin for Techstrong TV. We chatted about open source and how getting involved in open source can be a game changer for your career as a developer. The interview should air on their website within a couple of days or so.

Bangalore is known as the Garden City of India, with green areas spread everywhere between the buildings. It can be very hot in Bangalore in April. This year, the temperature was in the high thirties (Celsius). Luckily, the hotel had a pool to soak in after the conference days.

HTTP Patch with Jersey Client on JDK 16+

by Jan at April 26, 2024 11:26 AM

April 23, 2024

Java SE 22 support in 24.0.0.4

April 23, 2024 12:00 AM

The 24.0.0.4 release introduces support for Java SE 22 and includes CVE fixes. We’ve also updated several Open Liberty guides to use MicroProfile Reactive Messaging 3.0, MicroProfile 6.1, and Jakarta EE 10.

In Open Liberty 24.0.0.4:

Check out previous Open Liberty GA release blog posts.

Develop and run your apps using 24.0.0.4

If you’re using Maven, include the following in your pom.xml file:

<plugin>

<groupId>io.openliberty.tools</groupId>

<artifactId>liberty-maven-plugin</artifactId>

<version>3.10.2</version>

</plugin>

Or for Gradle, include the following in your build.gradle file:

buildscript {

repositories {

mavenCentral()

}

dependencies {

classpath 'io.openliberty.tools:liberty-gradle-plugin:3.8.2'

}

}

apply plugin: 'liberty'

Or if you’re using container images:

FROM icr.io/appcafe/open-liberty

Or take a look at our Downloads page.

If you’re using IntelliJ IDEA, Visual Studio Code or Eclipse IDE, you can also take advantage of our open source Liberty developer tools to enable effective development, testing, debugging and application management all from within your IDE.

Support for Java SE 22 in Open Liberty

Java 22 is the latest release of Java SE, released in March 2024. It contains new features and enhancements over previous versions of Java. However, Java 22 is not a long-term support (LTS) release and support for it will be dropped when the next version of Java is supported. It offers some new functions and changes that you are going to want to check out for yourself.

Check out the following feature changes in Java 22:

Take advantage of the new changes in Java 22 in Open Liberty now and get more time to review your applications, microservices, and runtime environments on your favorite server runtime!

To use Java 22 with Open Liberty, just download the latest release of Java 22 and install the 24.0.0.4 version of Open Liberty. Then, edit your Liberty server.env file to point the JAVA_HOME environment variable to your Java 22 installation and start testing today.

For more information about Java 22, see the following resources:

8 guides are updated to use MicroProfile Reactive Messaging 3.0

The following 8 guides are updated to use the MicroProfile Reactive Messaging 3.0, MicroProfile 6.1 and Jakarta EE 10 specifications:

Also, the integration tests in these guides are updated to use Testcontainers. To learn how to test reactive Java microservices in true-to-production environments using Testcontainers, try out the Testing reactive Java microservices guide.

Security vulnerability (CVE) fixes in this release

| CVE | CVSS score by X-Force® | Vulnerability assessment | Versions affected | Version fixed | Notes |
|---|---|---|---|---|---|
| | 7.5 | Denial of service | 21.0.0.3 - 24.0.0.3 | 24.0.0.4 | Affects the openidConnectClient-1.0, socialLogin-1.0, mpJwt-1.2, mpJwt-2.0, mpJwt-2.1, jwt-1.0 features |
| | 4.7 | Cross-site scripting | 23.0.0.3 - 24.0.0.3 | 24.0.0.4 | Affects the servlet-6.0 feature |

For a list of past security vulnerability fixes, reference the Security vulnerability (CVE) list.

Get Open Liberty 24.0.0.4 now

Available through Maven, Gradle, Docker, and as a downloadable archive.

April 22, 2024

Join Live Webinar - AI on Jakarta EE: A Hands-On Exploration of Toolkits and Libraries

by Dominika Tasarz at April 22, 2024 09:00 AM

Join us for our next free virtual event to take the first step towards building AI-powered Jakarta EE applications:

AI on Jakarta EE: A Hands-On Exploration of Toolkits and Libraries

Tuesday, the 30th of April, 2pm BST

Register: https://www.crowdcast.io/c/wfynmcu0b5cm

April 21, 2024

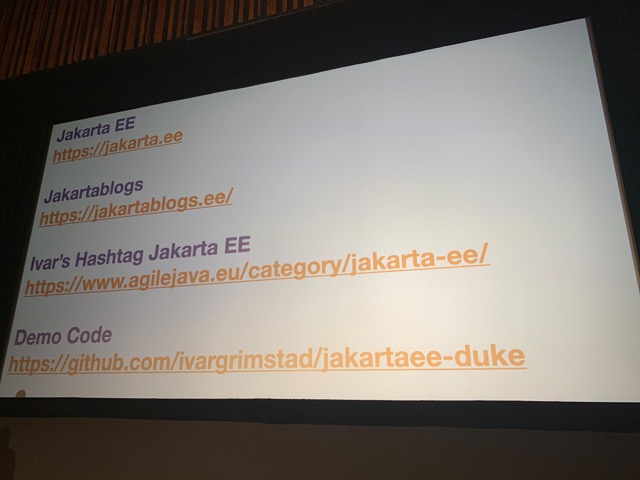

Hashtag Jakarta EE #225

by Ivar Grimstad at April 21, 2024 09:59 AM

Welcome to issue number two hundred and twenty-five of Hashtag Jakarta EE!

I will be in the air on my way to India for my fifth appearance at GIDS when this post is published. I look forward to meeting the Indian Java community again!

Milestone 2 of Jakarta EE 11 has been published. Take a look at the specification documents on the Jakarta EE 11 Specification pages:

– Jakarta EE Platform 11

– Jakarta EE Web Profile 11

– Jakarta EE Core Profile 11

The API artifacts are available in Maven Central with the following coordinates:

Jakarta EE Platform 11 Milestone 2

<dependency>

<groupId>jakarta.platform</groupId>

<artifactId>jakarta.jakartaee-api</artifactId>

<version>11.0.0-M2</version>

</dependency>

Jakarta EE Web Profile 11 Milestone 2

<dependency>

<groupId>jakarta.platform</groupId>

<artifactId>jakarta.jakartaee-web-api</artifactId>

<version>11.0.0-M2</version>

</dependency>

Jakarta EE Core Profile 11 Milestone 2

<dependency>

<groupId>jakarta.platform</groupId>

<artifactId>jakarta.jakartaee-core-api</artifactId>

<version>11.0.0-M2</version>

</dependency>

The component specifications of Jakarta EE 11 are steadily moving through the release reviews, and here is the current status. As you can see, there are quite a few that will have their release review started next week.

Done:

– Jakarta Annotations 3.0

– Jakarta Contexts and Dependency Injection 4.1

– Jakarta Expression Language 6.0

– Jakarta Interceptors 2.2

In progress:

– Jakarta RESTful Web Services 4.0

– Jakarta Validation 3.1

About to start:

– Jakarta Authorization 3.0

– Jakarta Data 1.0

– Jakarta Pages 4.0

– Jakarta Persistence 3.2 (starting on Monday)

– Jakarta Servlet 6.1

– Jakarta WebSocket 2.2 (will be restarted shortly)

One of the most exciting features of Jakarta EE 11 is the new Jakarta Data specification. Gavin King has written a two-part article series titled A Preview of Jakarta Data 1.0 about it. Check out Part 1 and Part 2 to understand the rationale of the specification and learn what it is all about.

April 20, 2024

Pure Java AI--airhacks.fm podcast

by admin at April 20, 2024 01:27 PM

Subscribe to airhacks.fm podcast via: Spotify | iTunes | RSS

April 19, 2024

How to debug Quarkus applications

by F.Marchioni at April 19, 2024 08:24 AM

In this article, we will learn how to debug a Quarkus application using two popular development environments: IntelliJ IDEA and VS Code. We’ll explore how these IDEs can empower you to effectively identify, understand, and resolve issues within your Quarkus projects. Enabling Debugging in Quarkus When running in development mode, Quarkus, by default, ... Read more

The post How to debug Quarkus applications appeared first on Mastertheboss.

April 18, 2024

How to Quickly Switch Between JDKs Without Tools

by admin at April 18, 2024 04:13 PM

alias j8='export JAVA_HOME=/Library/Java/JavaVirtualMachines/jdk1.8.0_201.jdk/Contents/Home;javaPATH'

alias javaPATH='export PATH=$JAVA_HOME/bin:$PATH'

You can switch to Java 8 by running j8 in your terminal. (Quickly switching to Java 8 is especially useful for code archaeologists and historians. 😀)
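To confirm that the switch took effect, a tiny Java class can report which JVM is actually running it. This is only a sketch: the class name ActiveJdk is made up for illustration, and it relies solely on the standard java.version and java.home system properties, so it also compiles on Java 8.

```java
// Hypothetical helper (not part of the original post): reports the JVM in use.
final class ActiveJdk {

    // Builds a one-line summary of the JVM that is executing this class.
    static String describe() {
        return "java.version=" + System.getProperty("java.version")
             + ", java.home=" + System.getProperty("java.home");
    }

    public static void main(String[] args) {
        System.out.println(describe());
    }
}
```

After running j8, compile it with javac ActiveJdk.java and run it with java ActiveJdk. The printed java.home should then point into the JDK that the alias selected.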

April 11, 2024

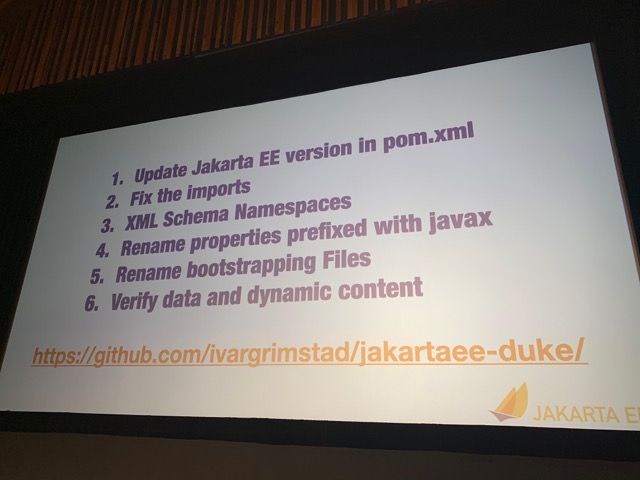

Simplifying migration to Jakarta EE with tools

by F.Marchioni at April 11, 2024 08:44 AM

In this article we will learn some of the available tools or plugins you can use to migrate your legacy Java EE/Jakarta EE 8 applications to the newer Jakarta EE environment. We will also discuss the common challenges that you can solve by using tools rather than performing a manual migration of your projects. Challenges ... Read more

The post Simplifying migration to Jakarta EE with tools appeared first on Mastertheboss.

April 10, 2024

Book Review: DuckDB in Action

by Thorben Janssen at April 10, 2024 08:00 AM

The post Book Review: DuckDB in Action appeared first on Thorben Janssen.

What if I told you that there’s a tool that makes it incredibly easy to process datasets from various sources, analyze them using SQL, and export the result in different formats? And that it doesn’t require all the hassle you know from typical relational databases or a custom implementation. Sounds too good to be true,...

April 09, 2024

Support for Java 22 and an updated preview of Jakarta Data in 24.0.0.4-beta

April 09, 2024 12:00 AM

The 24.0.0.4-beta release introduces support for Java SE 22 and updates for the Jakarta Data 1.0 preview, including improvements for type safety and a new @Find annotation to define repository find methods.

The Open Liberty 24.0.0.4-beta includes the following beta features (along with all GA features):

See also previous Open Liberty beta blog posts.

Run your Open Liberty apps with Java 22

Java 22 is the latest release of Java, released in March 2024. It contains new features and enhancements over previous versions of Java. However, Java 22 is not a long-term support (LTS) release and support for it will be dropped when the next version of Java is supported. It offers some new functions and changes that you are going to want to check out for yourself.

Check out the following feature changes in Java 22:

Take advantage of the new changes in Java 22 in Open Liberty now and get more time to review your applications, microservices, and runtime environments on your favorite server runtime!

To use Java 22 with Open Liberty, just download the latest release of Java 22 and install the 24.0.0.4-beta version of Open Liberty. Then, edit your Liberty server.env file to point the JAVA_HOME environment variable to your Java 22 installation and start testing today. The 24.0.0.4-beta release introduces beta support for Java 22. As we work toward full Java 22 support, please bear with any of our implementations of these functions that might not be ready yet.

For more information about Java 22, see the following resources:

Try out new functions for Jakarta Data 1.0 at milestone 3

Jakarta Data is a new Jakarta EE open source specification that standardizes the popular Data Repository pattern across various providers. Open Liberty 24.0.0.4-beta includes the Jakarta Data 1.0 Milestone 3 release, which introduces the static metamodel and the ability to annotatively compose Find methods.

The Open Liberty beta includes a test implementation of Jakarta Data that we are using to experiment with proposed specification features. You can try out these features and provide feedback to influence the Jakarta Data 1.0 specification as it continues to be developed. The test implementation currently works with relational databases and operates by redirecting repository operations to the built-in Jakarta Persistence provider.

Jakarta Data 1.0 milestone 3 introduces the concept of a static metamodel, which allows for more type-safe usage, and the ability to define repository find methods with the @Find annotation. To use these capabilities, you need an Entity and a Repository.

Start by defining an entity class that corresponds to your data. With relational databases, the entity class corresponds to a database table and the entity properties (public methods and fields of the entity class) generally correspond to the columns of the table. An entity class can be:

- annotated with jakarta.persistence.Entity and related annotations from Jakarta Persistence.

- a Java class without entity annotations, in which case the primary key is inferred from an entity property that is named id or ending with Id, and an entity property that is named version designates an automatically incremented version column.

You define one or more repository interfaces for an entity, annotate those interfaces as @Repository, and inject them into components by using @Inject. The Jakarta Data provider supplies the implementation of the repository interface for you.

The following example shows a simple entity:

@Entity

public class Product {

@Id

public long id;

public boolean isDiscounted;

public String name;

public float price;

@Version

public long version;

}

The following example shows a repository that defines operations that relate to the entity. Your repository interface can inherit from built-in interfaces such as BasicRepository and CrudRepository to gain various general-purpose repository methods for inserting, updating, deleting, and querying for entities. However, in this case, we define all of the methods ourselves by using the new life-cycle annotations.

@Repository(dataStore = "java:app/jdbc/my-example-data")

public interface Products extends BasicRepository<Product, Long> {

@Insert

Product add(Product newProduct);

// parameter based query that requires compilation with -parameters to preserve parameter names

@Find

Optional<Product> find(long id);

// parameter based query that does not require -parameters because it explicitly specifies the name

@Find

Page<Product> find(@By("isDiscounted") boolean onSale,

PageRequest<Product> pageRequest);

// query-by-method name pattern:

List<Product> findByNameIgnoreCaseContains(String searchFor, Order<Product> orderBy);

// query via JPQL:

@Query("UPDATE Product o SET o.price = o.price - (?2 * o.price), o.isDiscounted = true WHERE o.id = ?1")

boolean discount(long productId, float discountRate);

}

Observe that the repository interface includes type parameters in PageRequest<Product> and Order<Product>. These parameters help ensure that the page request and sort criteria are for a Product entity, rather than some other entity. To enable this function, you can optionally define a static metamodel class for the entity (or various IDEs might generate one for you after the 1.0 specification is released). The following example shows a static metamodel class that you can use with the Product entity:

@StaticMetamodel(Product.class)

public class _Product {

public static volatile SortableAttribute<Product> id;

public static volatile SortableAttribute<Product> isDiscounted;

public static volatile TextAttribute<Product> name;

public static volatile SortableAttribute<Product> price;

public static volatile SortableAttribute<Product> version;

// The static metamodel can also have String constants for attribute names,

// but those are omitted from this example

}

The following example shows the repository and static metamodel being used:

@DataSourceDefinition(name = "java:app/jdbc/my-example-data",

className = "org.postgresql.xa.PGXADataSource",

databaseName = "ExampleDB",

serverName = "localhost",

portNumber = 5432,

user = "${example.database.user}",

password = "${example.database.password}")

public class MyServlet extends HttpServlet {

@Inject

Products products;

protected void doGet(HttpServletRequest req, HttpServletResponse resp)

throws ServletException, IOException {

// Insert:

Product prod = ...

prod = products.add(prod);

// Find one entity:

prod = products.find(productId).orElseThrow();

// Find all, sorted:

List<Product> all = products.findByNameIgnoreCaseContains(searchFor, Order.by(

_Product.price.desc(),

_Product.name.asc(),

_Product.id.asc()));

// Find the first 20 most expensive products on sale:

Page<Product> page1 = products.find(onSale, Order.by(_Product.price.desc(),

_Product.name.asc(),

_Product.id.asc())

.pageSize(20));

...

}

}

To enable the new beta feature in your app, add it to your server.xml file:

<server>

<featureManager>

<feature>data-1.0</feature>

...

</featureManager>

...

</server>

Try it now

To try out these features, update your build tools to pull the Open Liberty All Beta Features package instead of the main release. The beta works with Java SE 22, Java SE 21, Java SE 17, Java SE 11, and Java SE 8.

If you’re using Maven, you can install the All Beta Features package by using:

<plugin>

<groupId>io.openliberty.tools</groupId>

<artifactId>liberty-maven-plugin</artifactId>

<version>3.10.2</version>

<configuration>

<runtimeArtifact>

<groupId>io.openliberty.beta</groupId>

<artifactId>openliberty-runtime</artifactId>

<version>24.0.0.4-beta</version>

<type>zip</type>

</runtimeArtifact>

</configuration>

</plugin>

You must also add dependencies to your pom.xml file for the beta version of the APIs that are associated with the beta features that you want to try. For example, the following block adds dependencies for two example beta APIs:

<dependency>

<groupId>org.example.spec</groupId>

<artifactId>exampleApi</artifactId>

<version>7.0</version>

<type>pom</type>

<scope>provided</scope>

</dependency>

<dependency>

<groupId>example.platform</groupId>

<artifactId>example.example-api</artifactId>

<version>11.0.0</version>

<scope>provided</scope>

</dependency>

Or for Gradle:

buildscript {

repositories {

mavenCentral()

}

dependencies {

classpath 'io.openliberty.tools:liberty-gradle-plugin:3.8.2'

}

}

apply plugin: 'liberty'

dependencies {

libertyRuntime group: 'io.openliberty.beta', name: 'openliberty-runtime', version: '[24.0.0.4-beta,)'

}

Or if you’re using container images:

FROM icr.io/appcafe/open-liberty:beta

Or take a look at our Downloads page.

If you’re using IntelliJ IDEA, Visual Studio Code or Eclipse IDE, you can also take advantage of our open source Liberty developer tools to enable effective development, testing, debugging, and application management all from within your IDE.

For more information on using a beta release, refer to the Installing Open Liberty beta releases documentation.

We welcome your feedback

Let us know what you think on our mailing list. If you hit a problem, post a question on StackOverflow. If you hit a bug, please raise an issue.

March 29, 2024

The Payara Monthly Catch - March 2024

by Chiara Civardi (chiara.civardi@payara.fish) at March 29, 2024 08:00 AM

Ahoy, Payara Community! Here's an overview of our fresh catch for March, where we've reeled in our favorite bits from the depths for you to enjoy and power up your Jakarta EE applications!

March 26, 2024

Getting Started with Jakarta Data API

by F.Marchioni at March 26, 2024 10:15 AM

Jakarta Data API is a powerful specification that simplifies data access across a variety of database types, including relational and NoSQL databases. In this article, you will learn how to leverage this programming model, which will be part of Jakarta EE 11. Overview of Jakarta Data API Jakarta.Data API: Offers a higher-level abstraction for data ... Read more

The post Getting Started with Jakarta Data API appeared first on Mastertheboss.

March 15, 2024

How to use a Datasource in Quarkus

by F.Marchioni at March 15, 2024 06:00 PM

This article will teach you how to use a plain Datasource resource in a Quarkus application. We will create a simple REST Endpoint and inject a Datasource in it to extract the java.sql.Connection object. We will also learn how to configure the Datasource pool size. Quarkus Connection Pool Quarkus uses Agroal as connection pool implementation ... Read more

The post How to use a Datasource in Quarkus appeared first on Mastertheboss.

March 08, 2024

Database Modeling Tutorial Using PlantUML

by Alexius Dionysius Diakogiannis at March 08, 2024 05:17 PM

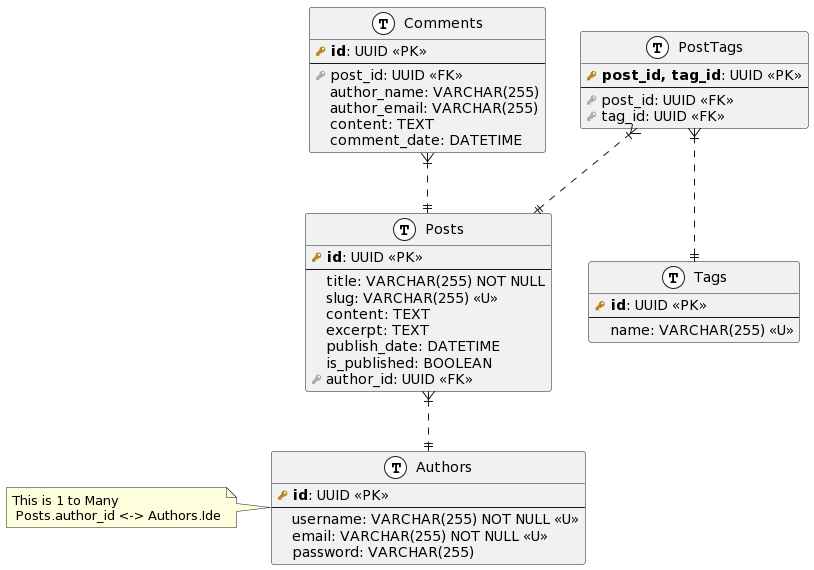

In this tutorial, we’ll explore the database modeling aspect of a small blog using PlantUML. We’ll start by defining the tables and their attributes, then establish relationships between them based on the provided database model.

1. Understanding the Database Model

Let’s review the database model for our small blog:

Tables:

- Posts: Represents individual blog posts.

- Authors: Stores user information for blog authors.

- Comments: Contains comments left by users on blog posts.

- Tags: Stores tags associated with blog posts.

- PostTags: Join table to establish a many-to-many relationship between posts and tags.

Relationships:

- Posts – Authors: One-to-many relationship where a post belongs to one author.

- Posts – Comments: One-to-many relationship where a post can have many comments.

- Posts – Tags: Many-to-many relationship facilitated by the PostTags join table.

- Tags – Posts: Many-to-many relationship facilitated by the PostTags join table.

2. Creating the PlantUML Diagram

Now, let’s represent the database model using PlantUML:

@startuml

!define primary_key(x) <b><color:#b8861b><&key></color> x</b>

!define foreign_key(x) <color:#aaaaaa><&key></color> x

!define column(x) <color:#efefef><&media-record></color> x

!define table(x) entity x << (T, white) >>

table(Posts) {

primary_key(id): UUID <<PK>>

--

column(title): VARCHAR(255) NOT NULL

column(slug): VARCHAR(255) <<U>>

column(content): TEXT

column(excerpt): TEXT

column(publish_date): DATETIME

column(is_published): BOOLEAN

foreign_key(author_id): UUID <<FK>>

}

table(Authors) {

primary_key(id): UUID <<PK>>

--

column(username): VARCHAR(255) NOT NULL <<U>>

column(email): VARCHAR(255) NOT NULL <<U>>

column(password): VARCHAR(255)

}

table(Comments) {

primary_key(id): UUID <<PK>>

--

foreign_key(post_id): UUID <<FK>>

column(author_name): VARCHAR(255)

column(author_email): VARCHAR(255)

column(content): TEXT

column(comment_date): DATETIME

}

table(Tags) {

primary_key(id): UUID <<PK>>

--

column(name): VARCHAR(255) <<U>>

}

table(PostTags) {

primary_key("post_id, tag_id"): UUID <<PK>>

--

foreign_key(post_id): UUID <<FK>>

foreign_key(tag_id): UUID <<FK>>

}

Posts }|..|| Authors

note left of Authors: This is 1 to Many \n Posts.author_id <-> Authors.id

Comments }|..|| Posts

PostTags }|..|| Posts

PostTags }|..|| Tags

@enduml

After execution the diagram should look like the following

3. Code Explanation

Let’s break down the PlantUML code step by step:

Macros Definition:

- Primary Key Macro (primary_key): This macro defines the styling for primary key columns. It uses HTML-like formatting to display a key icon in a specified color (#b8861b) and the column name in bold. Example: primary_key(id): UUID <<PK>>

- Foreign Key Macro (foreign_key): Similar to the primary key macro, this defines the styling for foreign key columns. It displays the key icon in a specified color (#aaaaaa). Example: foreign_key(author_id): UUID <<FK>>

- Column Macro (column): This macro defines the styling for regular columns. It displays a media-record icon in a specified color (#efefef). Example: column(title): VARCHAR(255) NOT NULL

- Table Macro (table): This macro defines the styling for tables. It declares an entity whose stereotype marker shows the letter T on a white background. Example: table(Posts) { ... }

Table Definitions:

- Posts Table (Posts): This table represents blog posts. It contains columns for primary key (id), title (title), slug (slug), content (content), excerpt (excerpt), publish date (publish_date), and a boolean flag for publication status (is_published). The author_id column is a foreign key referencing the Authors table.

- Authors Table (Authors): This table stores information about blog authors. It includes columns for primary key (id), username (username), email (email), and password (password). Both username and email are marked as unique to ensure data integrity.

- Comments Table (Comments): This table contains comments left by users on blog posts. It includes columns for primary key (id), foreign key referencing the Posts table (post_id), author name (author_name), author email (author_email), content (content), and comment date (comment_date).

- Tags Table (Tags): This table stores tags associated with blog posts. It includes columns for primary key (id) and tag name (name). The name column is marked as unique to ensure each tag is unique.

- PostTags Table (PostTags): This table serves as a join table to establish a many-to-many relationship between posts and tags. It includes a composite primary key (post_id, tag_id) and foreign keys referencing the Posts and Tags tables, respectively.

Relationships:

- Posts – Authors: This relationship indicates that each post is authored by one author (one-to-many). The author_id column in the Posts table references the id column in the Authors table.

- Posts – Comments: This relationship signifies that each post can have multiple comments (one-to-many). The post_id column in the Comments table references the id column in the Posts table.

- Posts – Tags: This relationship represents a many-to-many relationship between posts and tags facilitated by the PostTags join table.

- Tags – Posts: This relationship mirrors the many-to-many relationship between posts and tags, also facilitated by the PostTags join table.

Conclusion:

In this tutorial, we’ve explored the PlantUML code for modeling the database structure of a blog. By understanding the macros, table definitions, and relationships within the code, you’ll be better equipped to visualize and customize your database model.

March 04, 2024

Docker-compose: ports vs expose explained

by F.Marchioni at March 04, 2024 03:37 PM

Docker-compose allows you to access container ports in two different ways: using “ports” and “expose:”. In this tutorial we will learn what is the difference between “ports” and “expose:” providing clear examples. Before diving into the differences between “ports” and “expose”, we recommend checking this article if you are new to Docker-Compose: Orchestrate containers using ... Read more

The post Docker-compose: ports vs expose explained appeared first on Mastertheboss.

March 01, 2024

Getting started with Jakarta EE

by F.Marchioni at March 01, 2024 07:47 AM

This article details how to get started quickly with Jakarta EE, the new reference specification for the Java Enterprise API. As most of you probably know, Java EE moved from Oracle to the Eclipse Foundation under the Eclipse Enterprise for Java (EE4J) project. There is already a list of application servers which ... Read more

The post Getting started with Jakarta EE appeared first on Mastertheboss.

February 25, 2024

How to run WildFly on Openshift

by F.Marchioni at February 25, 2024 08:42 AM

This tutorial will teach you how to run WildFly applications on Openshift using WildFly S2I images. At first, we will learn how to build and deploy applications using Helm Charts. Then, we will learn how to use the S2I legacy approach which relies on ImageStreams and Templates. WildFly Cloud deployments There are two main strategies ... Read more

The post How to run WildFly on Openshift appeared first on Mastertheboss.

February 07, 2024

FetchType: Lazy/Eager loading for Hibernate & JPA

by Thorben Janssen at February 07, 2024 12:52 PM

The post FetchType: Lazy/Eager loading for Hibernate & JPA appeared first on Thorben Janssen.

Choosing the right FetchType is one of the most important decisions when defining your entity mapping. It specifies when your JPA implementation, e.g., Hibernate, fetches associated entities from the database. You can choose between EAGER and LAZY loading. The first one fetches an association immediately, and the other only when you use it. I explain...

February 03, 2024

How to find slow SQL queries with Hibernate or JPA

by F.Marchioni at February 03, 2024 09:45 PM

This article will teach you the best strategies to detect slow SQL statements when using an ORM such as Hibernate or the Jakarta Persistence API that it implements. Step 1: Learn how to debug SQL Statements Data persistence is one of the key factors when it comes to application performance. Therefore, it’s ... Read more

The post How to find slow SQL queries with Hibernate or JPA appeared first on Mastertheboss.

February 01, 2024

How to: Apache Server as a Load Balancer for your GlassFish Cluster

by Ondro Mihályi at February 01, 2024 10:45 PM

Setting up a GlassFish server cluster with an Apache HTTP server as a load balancer involves several steps:

- Configuring GlassFish for clustering

- Setting up the Apache HTTP Server as a load balancer

- Enabling sticky sessions for session persistence

We’ll assume that you’ve already configured GlassFish for clustering. If you haven’t done so, you can follow our guide on how to set up a GlassFish cluster to prepare your own GlassFish cluster. After you’ve prepared it, this tutorial will guide you to configure Apache HTTP Server as a load balancer with sticky sessions.

Step 1: Install Apache HTTP Server

Install Apache HTTP Server on a machine that will act as the load balancer. This should be a separate machine from the machines that run GlassFish cluster instances, mainly for security reasons. While your cluster should be accessible through the Apache server, GlassFish cluster instances shouldn’t be publicly accessible. Therefore, you should configure your firewall or networking rules to allow access to GlassFish instances only from the Apache server.

On Apt-based systems, like Ubuntu or Debian, you can install Apache HTTP Server from the system repository with the following commands:

sudo apt update

sudo apt install apache2

Step 2: Enable Proxy Modules in Apache HTTP Server

Enable the necessary proxy modules (proxy, proxy_http, proxy_balancer, and lbmethod_byrequests modules) in Apache server:

sudo a2enmod proxy

sudo a2enmod proxy_http

sudo a2enmod proxy_balancer

sudo a2enmod lbmethod_byrequests

Step 3: Configure the load balancer mechanism

Edit the Apache configuration file (usually located at /etc/apache2/sites-available/000-default.conf) and add the following configuration:

<VirtualHost *:80>

# Basic server configuration, use appropriate values according to your set up

ServerAdmin webmaster@localhost

ServerName yourdomain.com

# access and error log files

ErrorLog ${APACHE_LOG_DIR}/error.log

CustomLog ${APACHE_LOG_DIR}/access.log combined

# Forward requests through the load balancer named "balancer://glassfishcluster/"

# Uses the JSESSIONID cookie to stick the session to an appropriate GlassFish instance

ProxyPass / balancer://glassfishcluster/ stickysession=JSESSIONID failontimeout=On

ProxyPassReverse / balancer://glassfishcluster/

# Configuration of the load balancer and GlassFish instances connected to it

<Proxy balancer://glassfishcluster>

ProxySet lbmethod=byrequests

BalancerMember http://glassfish1:28080 route=instance1 timeout=10 retry=60

BalancerMember http://glassfish2:28080 route=remoteInstance timeout=10 retry=60

# Add more BalancerMembers for additional GlassFish instances

</Proxy>

</VirtualHost>

Replace the following according to your actual setup:

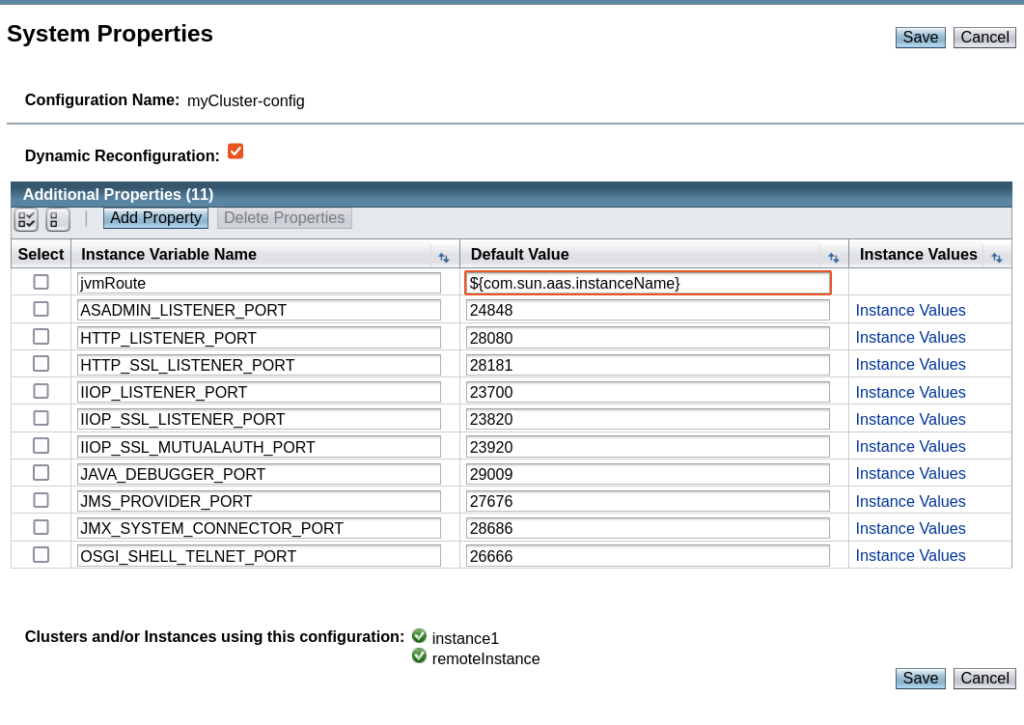

- yourdomain.com – the domain name of your application (the DNS record should point to the Apache server)

- glassfish1 – hostname or IP address of your GlassFish instance with name instance1

- glassfish2 – hostname or IP address of your GlassFish instance with name remoteInstance

- instance1, remoteInstance – names of your GlassFish instances. If you use different names, adjust them here and make sure that the jvmRoute system property on GlassFish instances is set to the same instance name

Note that:

- GlassFish instances must be configured with the jvmRoute system property so that the GlassFish instance name is added to the session cookie

- Values in the route arguments of BalancerMember must be the GlassFish instance names matching that member (the values set by the jvmRoute system property). The value of the jvmRoute system property should be defined in the GlassFish cluster configuration as ${com.sun.aas.instanceName} to reflect the GlassFish instance name. This value will then be added to the session cookie so that the Apache load balancer can match a cookie with the right GlassFish instance.

- With the configuration failontimeout=On, the load balancer waits at most 10 seconds (the timeout value set on each BalancerMember) for the request to complete. If it takes longer, it considers the GlassFish instance unresponsive and fails over to another instance to process the request. This treats GlassFish instances that are stuck (e.g. when out of available memory) as inactive. If some requests take more time to complete, either disable this option or increase the timeout arguments on BalancerMember

- The example configuration doesn’t contain HTTPS configuration. We strongly recommend using HTTPS for the Apache virtual host in production, signed with an SSL/TLS certificate, and redirecting all plain HTTP requests to the equivalent HTTPS requests

- If you use a different session cookie name, replace JSESSIONID with your custom cookie name
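To make the cookie matching more concrete, here is a minimal Java sketch of how a route can be read from a session cookie value. It is a simplified model, not code from Apache or GlassFish: it assumes the session ID has the form `<id>.<route>`, with `.` as the separator, and the `StickyRoute` class and `routeOf` method are hypothetical names used only for illustration.

```java
import java.util.Optional;

public class StickyRoute {

    /** Returns the route part of a session ID such as "0a1b2c3d4e.instance1". */
    public static Optional<String> routeOf(String sessionId) {
        if (sessionId == null) {
            return Optional.empty();
        }
        // Assumption: the route follows the first '.' in the cookie value
        int dot = sessionId.indexOf('.');
        // No separator, or nothing after it: the cookie carries no route
        if (dot < 0 || dot == sessionId.length() - 1) {
            return Optional.empty();
        }
        return Optional.of(sessionId.substring(dot + 1));
    }

    public static void main(String[] args) {
        // Made-up session IDs, with and without a jvmRoute suffix
        System.out.println(routeOf("0a1b2c3d4e.instance1").orElse("(no route)"));
        System.out.println(routeOf("0a1b2c3d4e").orElse("(no route)"));
    }
}
```

If the cookie value has no route suffix, the jvmRoute system property is probably not set on the GlassFish instances, and the load balancer has nothing to match against the route arguments.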

Step 4: Verify firewall configuration

Verify that:

- Apache server can access the HTTP port of each GlassFish instance (port 28080 by default)

- GlassFish instances are not accessible from a public network. For security reasons, they should only be accessible from the Apache server
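One way to check the first point is to attempt a plain TCP connection from the Apache machine to each GlassFish instance. The following is a minimal Java sketch of such a check; the `PortCheck` class is a hypothetical helper, and the host names and port are the ones from the example configuration above, so adjust them to your setup.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class PortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    public static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timed out, or host name not resolvable
            return false;
        }
    }

    public static void main(String[] args) {
        // Host names from the example Apache configuration (assumed values)
        for (String host : new String[] {"glassfish1", "glassfish2"}) {
            System.out.println(host + ":28080 reachable = "
                    + isReachable(host, 28080, 2000));
        }
    }
}
```

A successful connection only shows that the port is open from the machine running the check. Running the same check from a machine outside your private network should report the port as unreachable.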

Step 5: Restart Apache HTTP Server

Restart Apache to enable the new modules and apply the configuration changes.

On an operating system that uses systemd services (e.g. Ubuntu):

sudo systemctl restart apache2

Step 6: Verify the Sticky Session routing

Test your setup by accessing your application through the Apache load balancer. Verify that sticky sessions are working, ensuring that requests within the same session are directed to the same GlassFish instance and requests without a session are routed to a random instance.

You can use the test cluster application available at https://github.com/OmniFish-EE/clusterjsp/releases.

Summary

With these steps, you should have a basic setup of a GlassFish server cluster

- with an Apache HTTP Server acting as a load balancer

- sticky session routing for session persistence across requests

In case of an issue with a GlassFish instance, the load balancer should stop sending requests to that instance and keep using the remaining GlassFish instances in the cluster. Once the GlassFish instance recovers from issues, it will rejoin the load balancer and will start receiving requests again.

You can also rely on this mechanism when you need to restart the cluster; instead of restarting the whole cluster at once, you can restart GlassFish instances one by one. While one of the instances is being restarted, Apache server will continue sending requests to the other instances.

Getting started with Quarkus and Hibernate

by F.Marchioni at February 01, 2024 06:20 PM

In this article we will learn how to create and run a sample Quarkus 3 application which uses Hibernate ORM and JPA. We will create a sample REST endpoint that exposes the basic CRUD operations against a relational database such as PostgreSQL. Create the Quarkus Project: first, create a basic Quarkus project. For example, ...

The post Getting started with Quarkus and Hibernate appeared first on Mastertheboss.

Solving 0/1 nodes are available: 1 Insufficient memory

by F.Marchioni at February 01, 2024 07:17 AM

The error message “0/1 nodes are available: 1 Insufficient memory. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.” indicates that your pod can’t be scheduled due to memory constraints. Here are the steps to address this issue. Investigate Memory Usage: first, check the current memory usage of the OpenShift node where ...

The post Solving 0/1 nodes are available: 1 Insufficient memory appeared first on Mastertheboss.

January 29, 2024

How to: Set up a GlassFish Cluster

by Ondro Mihályi at January 29, 2024 05:04 PM

This tutorial will guide you to set up a GlassFish cluster that serves a single application on multiple machines.

This setup doesn’t enable HTTP session replication. Session data will be available only on the original cluster instance that created it. Therefore, if you access the cluster instances through a load balancer server, you should enable sticky session support on the load balancer server. With this mechanism, a single user will always be served by the same GlassFish instance, while different users (HTTP sessions) may be served by different GlassFish instances. It’s also possible to configure session replication in a GlassFish cluster so that each session is available on each instance, but this is not covered by this tutorial.

- Step 1: Start the Default Domain

- Step 2: Create a Cluster

- Step 3: Add routing config for sticky sessions

- Step 4: Start the cluster

- Step 5: Test the Cluster

- Step 6: Add an SSH Node

- Step 7: Create an Instance on the SSH Node

- Step 8: Start the remote instance

- Step 9: Verify Load Balancing

- Step 10: Deploy your application to the cluster

- Summary

- See also

Step 1: Start the Default Domain

- Open a terminal and navigate to the bin directory of your GlassFish installation.

- Start the default domain:

asadmin start-domain

- Open GlassFish Admin Console, which is running on http://localhost:4848 by default. If you access the Admin Console from a remote machine, you need to enable secure administration first and then access the Admin Console via HTTPS, e.g. https://glassfish-server.company.com:4848. To enable secure administration, refer to the Eclipse GlassFish Security Guide.

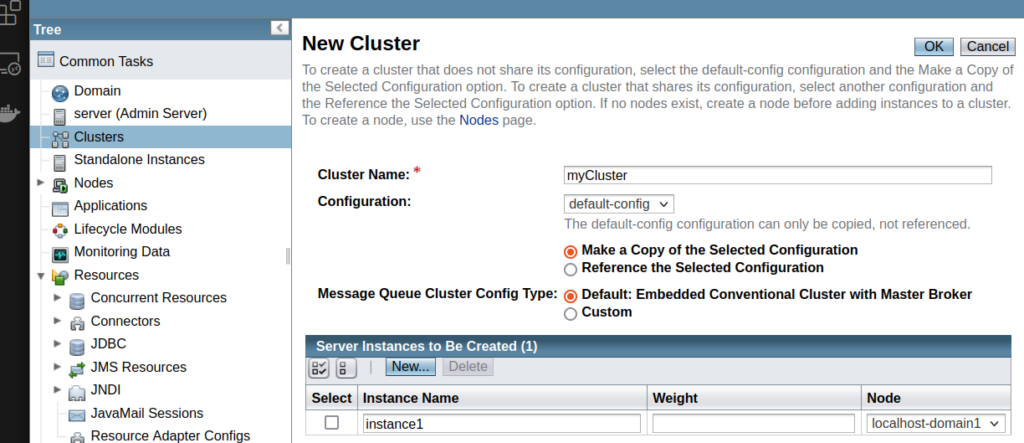

Step 2: Create a Cluster

- Navigate to “Clusters”

- Click on the “New…” button to create a new cluster.

- Enter a name for the cluster (e.g., myCluster)

- In the “Server Instances to Be Created” table, click “New…”, and then fill in instance1 as the instance name. Keep “Node” set to the default “localhost-domain1”

- Click “OK”

This will create a clustering configuration myCluster in GlassFish, with one GlassFish server instance instance1, which runs on the same machine as the GlassFish administration server (DAS).

Step 3: Add routing config for sticky sessions

- Navigate to Clusters → myCluster

- Click on the “myCluster-config” link in the “Configuration” field

- Click on “System Properties” to open the “System Properties” configuration for the cluster

- Click on “Add Property” button

- In the new row, set the value in the “Instance Variable Name” column to “jvmRoute”

- Set “Default Value” to “${com.sun.aas.instanceName}” so that it’s set to the actual instance name for every cluster instance

This configuration is needed to simplify the sticky session routing mechanism in load balancers, e.g. in Apache HTTP server.

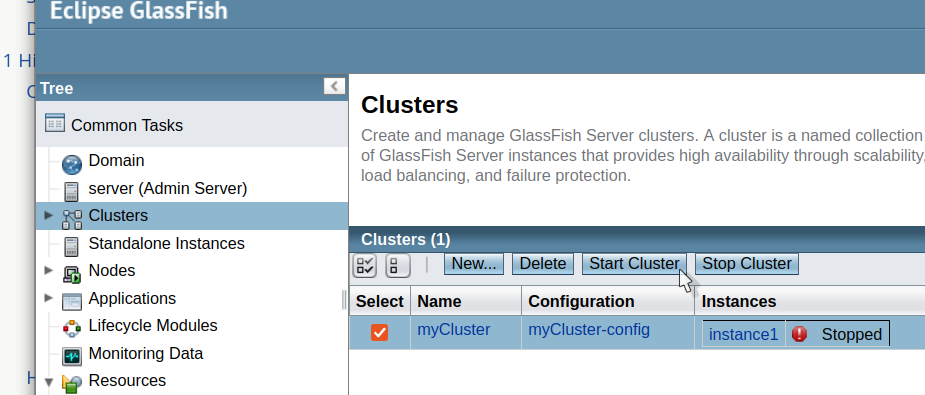

Step 4: Start the cluster

- Navigate to “Clusters”

- Click the checkbox in the “Select” column next to your cluster

- Click the “Start Cluster” button and wait until the cluster is started

- Navigate to http://DOMAIN_NAME:28080 (e.g. http://localhost:28080), where DOMAIN_NAME is the same as in the URL of your DAS server (e.g. localhost)

This will navigate you to the welcome page of the instance1 GlassFish instance running on port 28080.

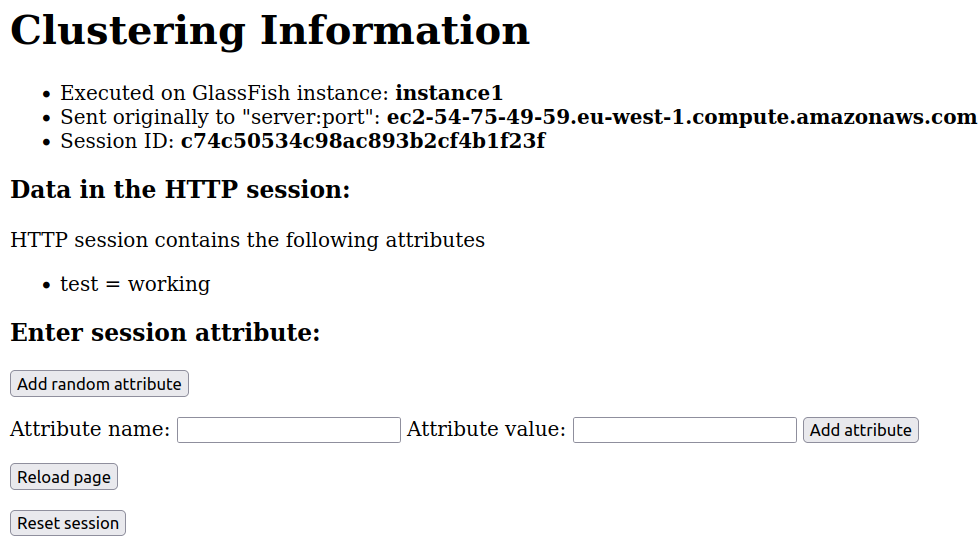

Step 5: Test the Cluster

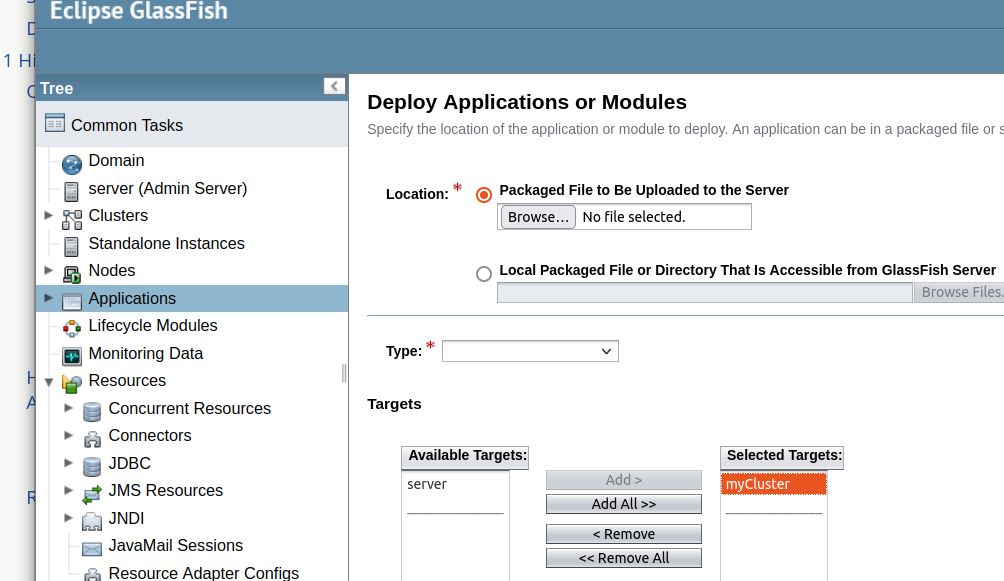

Deploy a cluster tester application, which you can download from https://github.com/OmniFish-EE/clusterjsp/releases.

- In GlassFish Admin Console, navigate to Applications and click the “Deploy…” button

- In the “Targets” section, click on your cluster in the “Available Targets” column and click the “Add >” button

- In the “Location” section, click Browse… and select the application WAR file

- Set the “Context Root” field to “cluster”

- Click “OK”

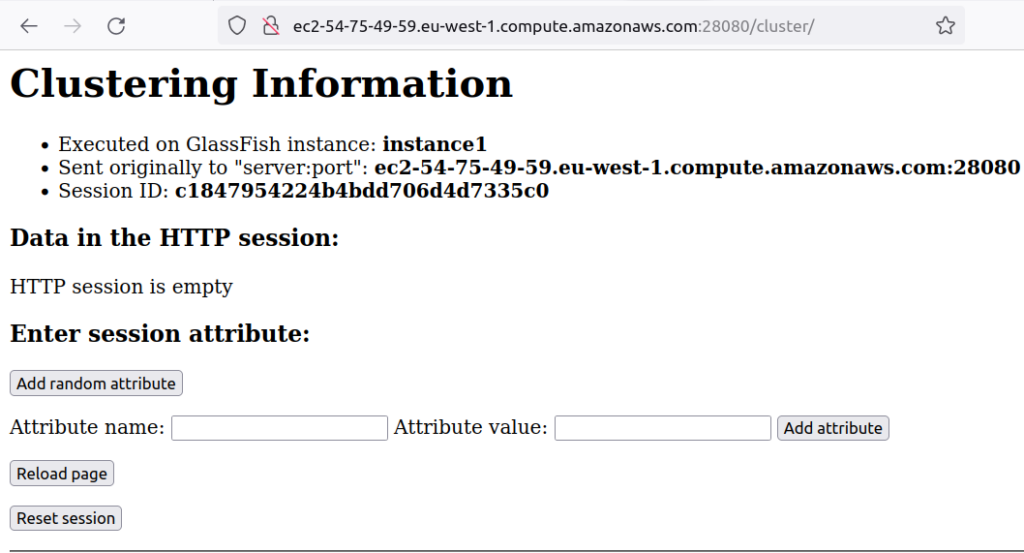

- Navigate to http://DOMAIN_NAME:28080/cluster (e.g. http://localhost:28080/cluster)

Right now, there’s a single instance in the cluster. All requests are handled by that instance, session data is always present, and everything should work as expected. As you add more instances to the cluster, you can use this tester application again to verify that everything still works when requests are sent to different instances.

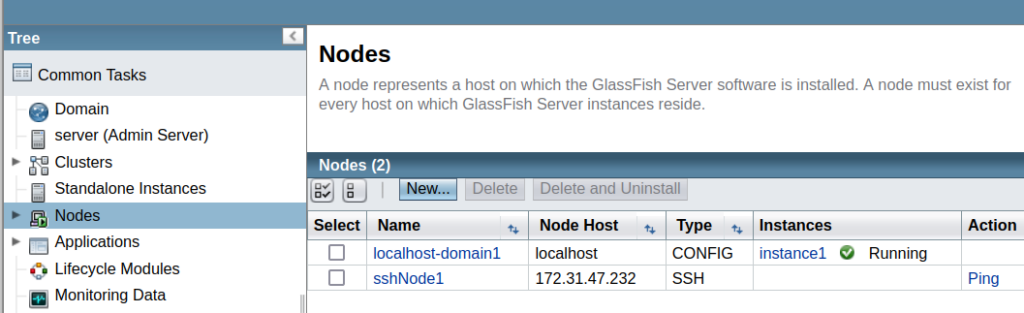

Step 6: Add an SSH Node

Now, add an SSH connection to a remote server where you want to run other GlassFish cluster instances.

This assumes that you already have a remote machine with an SSH server and Java installed.

Make sure that GlassFish admin server can access the remote machine on the SSH port (port 22 by default). Then:

- In GlassFish Admin Console, navigate to Nodes. There’s always at least 1 node, e.g. localhost-domain1, which represents the local machine

- Click on the “New…” button to add a new SSH node.

- Enter a name for the SSH node (e.g., sshNode1).

- Set the “Type” to “SSH”

- Set the “Host” to the IP address of the remote machine.

- If you want to install GlassFish on the remote machine, enable the checkbox “Install GlassFish Server”

- Set the “SSH User” to a user with sufficient privileges on the remote machine. Set it to ${user.name} if it’s the same user as the one running the GlassFish admin server

- Set the “SSH User Authentication” based on your authentication method. Fill in the authentication details, e.g. “SSH User Password” for “Password” authentication

- Click “OK” to add the SSH node.

Step 7: Create an Instance on the SSH Node

- Navigate to Clusters → myCluster.

- Select the “Instances” tab.

- Click on the “New…” button to create a new instance.

- Enter a name for the new instance (e.g., remoteInstance) and select the SSH node (sshNode1) as the target “Node”.

- Click “OK” to create the instance.

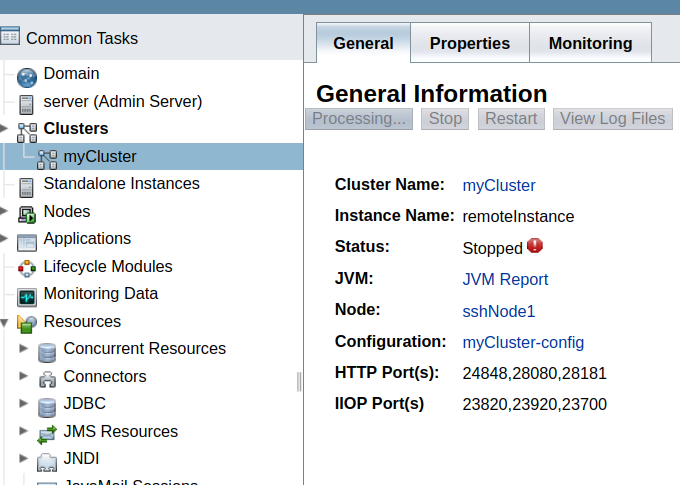

Make sure that the admin port of the remote instance is open for connections from GlassFish admin server and isn’t blocked by a firewall. The port number is 24848 by default. You can find it in GlassFish Admin Console:

- Navigate to Clusters → myCluster, the tab “Instances”

- Click on “remoteInstance” in the “Name” column

- The admin port number is the first port in the “HTTP Port(s)” field

Without this, the admin server will be able to start the instance via SSH but will not be able to communicate with it or detect that it is running.

Step 8: Start the remote instance

To start the new remote instance, you can start the cluster again, as you did before. Starting a cluster that is already running keeps the “instance1” instance running and starts all other instances that are not running.

- Navigate to “Clusters”

- Click the checkbox in the “Select” column next to your cluster

- Click the “Start Cluster” button and wait until the cluster is started

Alternatively, you can start the remoteInstance individually:

- Navigate to the “Instances” tab

- Select remoteInstance and click on the “Start” button.

Step 9: Verify Load Balancing

This assumes that you’ve already set up a load balancer server, e.g. Apache HTTP server, and the load balancer is configured to support sticky sessions.

Access the tester application you deployed previously through the load balancer and verify that requests are load-balanced between the instances:

http://load-balancer-ip:load-balancer-port/cluster

Replace load-balancer-ip and load-balancer-port with the appropriate values for your load balancer server.

- Verify that after an HTTP session is created, your requests are served by the same GlassFish instance and your session data remains in the session.

- After you reset the session, it’s possible that your future requests will be served by a different GlassFish instance

After everything is working, you can undeploy the test application clusterjsp and deploy your own application. Remember to select your cluster as the deployment target, so that your application is deployed to all GlassFish instances in the cluster.

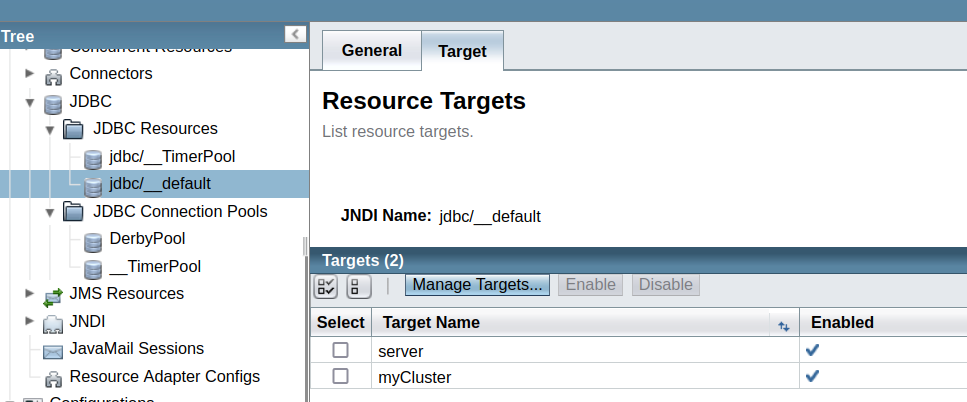

Step 10: Deploy your application to the cluster

Before you deploy your application to the cluster, make sure that all resources that your application requires, for example a JDBC resource, are deployed to the cluster too.

Now, deploy your application to the cluster in the same way as you deployed the tester application in Step 5:

- In GlassFish Admin Console, navigate to Applications and click the “Deploy…” button

- In the “Targets” section, click on your cluster in the “Available Targets” column and click the “Add >” button

- Configure other deployment properties and click “OK”

Summary

That’s it! You have now created a GlassFish cluster with instances, added an SSH node, and created an instance on this node using the Admin Console. Your application is running on all instances of the cluster. Now you can set up a load balancer server (e.g. Apache HTTP Server) with sticky sessions to proxy incoming requests to GlassFish instances, which we’ll cover in a future article.

See also

Last updated 2024-01-28 13:40:07 +0100

January 10, 2024

Monitoring Java Virtual Threads

by Jean-François James at January 10, 2024 05:14 PM

December 21, 2023

Introduction to PlantUML: Unleashing the Power of Visual Representation as a Code in Software Development

by Alexius Dionysius Diakogiannis at December 21, 2023 09:29 PM

Introduction

In the fast-paced world of software development, effective communication and clear documentation are paramount. This is where the power of visual representation comes into play, and one tool that has significantly simplified this process is PlantUML. This open-source project has revolutionized the way developers, project managers, and analysts create and share diagrams. It’s not just a tool; it’s a visual language that transforms the way we think about and document software architecture, processes, and workflows.

PlantUML stands out for its simplicity and efficiency. At its core, it’s a scripting language for creating diagrams. Unlike conventional diagramming tools that rely on a graphical interface, PlantUML allows users to describe diagrams using an intuitive and straightforward textual description. This text-based approach means diagrams can be easily version-controlled, shared, and edited, making collaboration seamless. PlantUML supports various types of diagrams, including sequence diagrams, use case diagrams, class diagrams, activity diagrams, component diagrams, state diagrams, and more, making it a versatile tool in the software development toolkit.

The importance of diagrams in software development and documentation cannot be overstated. They are the bridge between abstract concepts and their practical implementation. Diagrams provide a bird’s-eye view of complex systems, making it easier to understand, design, and communicate intricate software architecture and processes. They are essential for planning, explaining decisions, and onboarding new team members. In the realm of software engineering, where complexity is a given, diagrams are the lingua franca that ensures everyone, from developers to stakeholders, is on the same page.

This blog post is dedicated to exploring the depths of PlantUML with a particular focus on three key areas: sequence diagrams, component diagrams, and advanced theming.

- Sequence Diagrams: We will delve into sequence diagrams, a type of interaction diagram that shows how objects operate with one another and in what order. These diagrams are vital for visualizing the sequence of messages flowing from one object to another, crucial in understanding system functionality and debugging.

- Component Diagrams: We will explore component diagrams, which are especially useful in illustrating the organization and dependencies among a set of components. These include databases, user interfaces, systems, and more, making them indispensable in understanding and documenting system architecture.

- Advanced Theming: Finally, we’ll take a deep dive into the advanced theming capabilities of PlantUML. Customization of diagrams is not only about aesthetics; it’s about enhancing clarity, emphasizing key components, and tailoring diagrams to different audiences. We’ll explore how to apply custom themes and styles to make your diagrams more informative and visually appealing.

Throughout this blog post, we aim to provide you with comprehensive knowledge, practical examples, and best practices to harness the full potential of PlantUML. Whether you’re a seasoned developer or just starting out, understanding how to effectively use PlantUML can elevate your documentation and improve your project’s communication and efficiency. Let’s embark on this journey to master the art of diagramming with PlantUML.

Sequence Diagrams with PlantUML

Introduction to Sequence Diagrams

Sequence diagrams, integral to the Unified Modeling Language (UML), offer a dynamic modeling solution crucial in software development. They provide a clear visualization of how objects in a system interact over time, making them indispensable for understanding operational workflows and object interactions. These diagrams are particularly useful in depicting the sequence of messages and events between various parts of a system, which is essential for comprehending the flow of control and data within complex software architectures. By representing different entities as lifelines and their interactions as messages, sequence diagrams facilitate a detailed and temporal view of a system’s functionality, enabling developers and analysts to trace the sequence of events and interactions from start to end.

Creating a Basic Sequence Diagram

To create a basic sequence diagram in PlantUML, you start by defining the participants or objects involved. For instance, in a user authentication process, the primary actors might include a ‘User,’ an ‘Authenticator,’ and a ‘Database.’

Here’s a simple PlantUML script to illustrate this process:

@startuml

actor User

participant Authenticator

database Database

User -> Authenticator: Request Login

Authenticator -> Database: Validate Credentials

Database -> Authenticator: Credentials Valid

Authenticator -> User: Authentication Result

@enduml

The output is:

This script creates a sequence diagram where the ‘User’ sends a login request to the ‘Authenticator,’ which then interacts with the ‘Database’ to validate credentials. The database responds, and the authenticator communicates the result back to the user. Each arrow represents a message or interaction, with the direction indicating the flow.
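Because the diagram is plain text, a script like this can also be produced by code, for example from a list of interactions kept elsewhere in a project. The following is a minimal Java sketch of that idea. The `SequenceScript` class and its `Message` record are hypothetical helpers, not part of PlantUML, and the sketch only covers simple `->` messages.

```java
import java.util.List;

public class SequenceScript {

    /** One arrow in the diagram: sender, receiver and label. */
    public record Message(String from, String to, String label) {}

    /** Builds PlantUML source from participant declarations and messages. */
    public static String render(List<String> declarations, List<Message> messages) {
        StringBuilder sb = new StringBuilder("@startuml\n");
        for (String declaration : declarations) {
            sb.append(declaration).append('\n');
        }
        for (Message m : messages) {
            sb.append(m.from()).append(" -> ").append(m.to())
              .append(": ").append(m.label()).append('\n');
        }
        return sb.append("@enduml\n").toString();
    }

    public static void main(String[] args) {
        // Recreates the authentication example from this section
        String script = render(
            List.of("actor User", "participant Authenticator", "database Database"),
            List.of(
                new Message("User", "Authenticator", "Request Login"),
                new Message("Authenticator", "Database", "Validate Credentials"),
                new Message("Database", "Authenticator", "Credentials Valid"),
                new Message("Authenticator", "User", "Authentication Result")));
        System.out.print(script);
    }
}
```

The generated text can be saved to a file and rendered with PlantUML like any hand-written script.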

Advanced Features in Sequence Diagrams

PlantUML allows the addition of advanced features to sequence diagrams, such as loops, conditions, and concurrency. These elements add depth to the representation, making it possible to depict more complex scenarios.

For example, to introduce a condition in the authentication process, where the system retries the validation if credentials are incorrect, you can use an alt frame:

@startuml

actor User

participant Authenticator

database Database

loop Authentication Attempt

User -> Authenticator: Request Login

Authenticator -> Database: Validate Credentials

alt Credentials Valid

Database -> Authenticator: Success

Authenticator -> User: Authentication Successful

else Credentials Invalid

Database -> Authenticator: Failure

Authenticator -> User: Retry Login

end

end loop

@enduml

The output is:

In this enhanced diagram, the alt frame introduces a conditional operation. If the credentials are valid, the process ends with a success message. If not, it prompts the user to retry login, and the loop continues until successful authentication.

Component Diagrams in PlantUML

Introduction to Component Diagrams

Component diagrams are a cornerstone of software architecture visualization. These diagrams illustrate the modular structure of a system, highlighting how various parts, such as databases, user interfaces, or even entire subsystems, interrelate and interact. In PlantUML, crafting component diagrams is an art that combines technical accuracy with clarity. This section focuses on the creation of component diagrams, starting from basic concepts and progressively incorporating more complexity, including composite components.

Creating a Basic Component Diagram

Creating a component diagram in PlantUML begins with defining the system’s primary components. Let’s consider a simple web application comprising a Web Server, an Application Logic component, and a Database.

In PlantUML, this can be represented as:

@startuml

component [Web Server]

component [Application Logic]

database [Database]

[Web Server] --> [Application Logic]

[Application Logic] --> [Database]

@enduml

The output is:

This script represents a straightforward web application, where the Web Server communicates with the Application Logic, which in turn interacts with the Database. The arrows indicate the direction of communication between these components.

Incorporating Composite Components

As systems grow in complexity, you might need to represent composite components—components that encapsulate other components or groups of components. For instance, in a microservices architecture, a composite component can represent an entire service that consists of multiple smaller components.

Let’s expand our previous example to include composite components. Assume the Application Logic is now a composite component containing two subcomponents: ‘User Management’ and ‘Order Processing’.

@startuml

package "Web Application" {

component [Web Server]

package "Application Logic" {

component [User Management]

component [Order Processing]

}

database [Database]

}

[Web Server] --> [User Management]

[Web Server] --> [Order Processing]

[User Management] --> [Database]

[Order Processing] --> [Database]

@enduml

The output is:

In this enhanced diagram, ‘Application Logic’ is a composite component containing ‘User Management’ and ‘Order Processing’. The Web Server interacts separately with each subcomponent, while both subcomponents interact with the Database. This representation provides a clearer view of the system’s modular structure, showcasing how larger components are broken down into smaller, manageable parts.

Advanced Component Diagrams with PlantUML

For even more complex architectures, PlantUML allows you to depict dependencies, interfaces, and other intricate details. For example, in a microservices architecture, you might have services interacting via RESTful APIs or message queues.

Consider a scenario where the ‘Order Processing’ component communicates with an external ‘Payment Service’ via a REST API:

@startuml

package "Web Application" {

component [Web Server]

package "Application Logic" {

component [User Management]

component [Order Processing]

}

database [Database]

}

cloud {

[Payment Service]

[Payment Gateway]

}

[Order Processing] ..> [Payment Service] : REST API

[Payment Service] ..> [Payment Gateway] : Uses

[Web Server] --> [User Management]

[Web Server] --> [Order Processing]

[User Management] --> [Database]

[Order Processing] --> [Database]

@enduml

The output is:

In this complex diagram, the ‘Order Processing’ component within the Application Logic uses a REST API to communicate with an external ‘Payment Service’, which in turn uses a ‘Payment Gateway’. This level of detail is invaluable in large-scale distributed systems, providing a comprehensive view of component interactions and dependencies.

Advanced Theming in PlantUML

The Basics of Theming

Theming in PlantUML involves customizing the visual elements of diagrams, such as colors, fonts, and notes, to enhance readability and visual appeal. Effective theming can make complex diagrams more understandable and engaging. Basic theming involves setting global styles or individual element styles within the PlantUML script. For instance, you can change the color of components, background of notes, or the style of lines and arrows.

Here’s a simple example of applying basic theming to a sequence diagram:

@startuml

skinparam sequenceArrowColor DeepSkyBlue

skinparam sequenceActorBorderColor DarkSlateGray

actor User

participant Authenticator

database Database

User -> Authenticator: Request Login

Authenticator -> Database: Validate Credentials

Database -> Authenticator: Credentials Valid

Authenticator -> User: Authentication Result

@enduml

The output is:

In this script, sequenceArrowColor and sequenceActorBorderColor are used to customize the colors of the sequence diagram elements.

Advanced Theming Techniques

For advanced theming, PlantUML allows the creation of custom themes and styles using preprocessor directives and skin parameters. This feature is especially useful for applying consistent styling across multiple diagrams or for adhering to corporate branding guidelines.

To create a custom theme, you define a set of skin parameters in a separate file and then include this theme in your diagrams. For instance, you might have a ‘MyCustomTheme.iuml’ file with the following content:

!define MY_THEME_COLOR #3498db

skinparam backgroundColor #f0f0f0

skinparam ArrowColor MY_THEME_COLOR

skinparam ActorBorderColor MY_THEME_COLOR

skinparam component {

BackgroundColor #ecf0f1

ArrowColor MY_THEME_COLOR

}

You can then include this theme in your diagrams using the !include directive:

@startuml

!include MyCustomTheme.iuml

actor User

participant Authenticator

database Database

' ... rest of the diagram

@enduml

The final output is:

This approach allows for a high degree of customization and ensures consistent application of visual styles across different diagrams.

Practical Theming Example

Let’s apply a custom theme to a microservices architecture component diagram similar to the ones created earlier. Assuming the theme file ‘MicroserviceTheme.iuml’ contains specific color and style definitions, the enhanced diagram script will look like this:

@startuml

!include MicroserviceTheme.iuml

package "Microservices Architecture" {

[User Service]

[Order Service]

[Payment Service]

[Database]

}

interface "User API" as UserAPI

interface "Order API" as OrderAPI

interface "Payment API" as PaymentAPI

UserAPI ..> [User Service]

OrderAPI ..> [Order Service]

PaymentAPI ..> [Payment Service]

[User Service] --> [Database]: Reads/Writes

[Order Service] --> [Database]: Reads/Writes

[Payment Service] --> [Database]: Reads/Writes

@enduml

The final output is:

In this final version, the inclusion of the custom theme enhances the visual aesthetics and clarity of the diagram, making it more engaging and easier to interpret for the audience.

Conclusion

In this comprehensive guide, we’ve explored the power of PlantUML in creating sequence diagrams, component diagrams, and applying advanced theming. Whether you’re a developer, architect, or project manager, mastering these skills in PlantUML can significantly improve the clarity and effectiveness of your software documentation and architectural design. We encourage you to experiment with the examples provided, customize them to your needs, and explore the vast capabilities of PlantUML to elevate your diagramming skills to the next level.

November 19, 2023

Coding Microservice From Scratch (Part 16) | JAX-RS Done Right! | Head Crashing Informatics 83

by Markus Karg at November 19, 2023 05:00 PM

Write a pure-Java microservice from scratch, without an application server or any third-party frameworks, tools, or IDE plugins, using just the JDK, Maven and JAX-RS (aka Jakarta REST 3.1). This video series shows you the essential steps!

You asked why I am not simply using the Jakarta EE 10 Core API. There are many answers in this video!

If you like this video, please give it a thumbs up, share it, subscribe to my channel, or become my patreon https://www.patreon.com/mkarg. Thanks!

November 04, 2023

Jersey Performance Improvement (Step One) | Code Review | Head Crashing Informatics 82

by Markus Karg at November 04, 2023 05:00 PM

Let’s take a deep dive into the source code of #Jersey (the heart of GlassFish, Payara and Helidon) to learn how we can make our own I/O code run faster on modern Java.

In this first step, we apply NIO APIs from #Java 7 and 8 to process data more efficiently, and most notably: outside of the JVM.

If you like this video, please give it a thumbs up, share it, subscribe to my channel, or become my patreon https://www.patreon.com/mkarg. Thanks!

October 12, 2023

Moving from javax to jakarta namespace

by Jean-Louis Monteiro at October 12, 2023 02:32 PM

This blog aims to give some pointers for addressing the challenge of switching from the `javax` to the `jakarta` namespace. This is one of the biggest changes in Java in the last 20 years. No doubt. The entire ecosystem is impacted: not only Java EE or Jakarta EE application servers, but also libraries of any kind (Jackson, CXF, Hibernate, and Spring, to name a few). For instance, it took Apache TomEE about a year to convert all the source code and dependencies to the new `jakarta` namespace.

This blog is written from the user perspective, because the shift from `javax` to `jakarta` is as impactful for application providers as it is for libraries or application servers. There have been a couple of attempts to study the impact and investigate possible paths to make the change as smooth as possible.

The problem is harder than it appears to be. The `javax` package is of course in the import section of a class, but it can also appear in Strings, for instance if you use the Java Reflection API. Bytecode tools like ASM make the problem more complex, as do service loader mechanisms and many more. We will see that there are many ways to approach the problem, such as transforming byte code or converting the sources directly, but none are perfect.
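To illustrate why imports are only part of the problem, here is a deliberately naive Java sketch of a source-level rewrite. It is not taken from any of the tools discussed in this post; the `NaiveImportMigrator` class and its short package list are assumptions made for the example. It rewrites `import` statements for a few packages that moved to `jakarta`, leaves JDK packages such as `javax.sql` alone, and misses the class name hidden in a string passed to the Reflection API.

```java
import java.util.regex.Pattern;

public class NaiveImportMigrator {

    // A small, incomplete sample of packages that moved to the jakarta namespace
    private static final Pattern IMPORT = Pattern.compile(
            "(?m)^(\\s*import\\s+(?:static\\s+)?)javax\\."
            + "(servlet|persistence|ws\\.rs|inject|enterprise)\\.");

    /** Rewrites matching import statements and leaves everything else untouched. */
    public static String migrate(String source) {
        return IMPORT.matcher(source).replaceAll("$1jakarta.$2.");
    }

    public static void main(String[] args) {
        String source = String.join("\n",
            "import javax.servlet.http.HttpServlet;",
            "import javax.sql.DataSource;",
            "class Demo {",
            "    Object load() throws Exception {",
            "        return Class.forName(\"javax.servlet.Filter\");",
            "    }",
            "}");
        // The servlet import is rewritten; the javax.sql import and the
        // string literal passed to Class.forName stay as they were
        System.out.println(migrate(source));
    }
}
```

The string literal is exactly the kind of reference that compiles fine after such a rewrite and only fails at runtime, which is why the approaches below go beyond rewriting imports.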

Bytecode enhancement approach

The first legitimate approach that comes to our mind is the byte code approach. The goal is to keep the `javax` namespace as much as possible and use bytecode enhancement to convert binaries.

Compile time

It is possible to post-process libraries and packages, transforming the archives so that they are converted to the `jakarta` namespace.

- Maven Shade plugin (https://maven.apache.org/plugins/maven-shade-plugin/)

The Maven shade plugin has the ability to relocate packages. While the primary purpose isn’t to move from `javax` to `jakarta` package, it is possible to use it to relocate small libraries when they aren’t ready yet. We used this approach in TomEE itself or in third party libraries such as Apache Johnzon (JSONB/P implementation).

Here is an example in TomEE where we use Maven Shade Plugin to transform the Apache ActiveMQ Client library https://github.com/apache/tomee/blob/main/deps/activemq-client-shade/pom.xml

This approach is not perfect, especially when you have a multi-module library. For instance, if you have a project with 2 modules where A depends on B, you can use the shade plugin to convert the 2 modules and publish them using a classifier. The issue is that when you need A, you have to exclude B so that you can include it manually with the right classifier.

We’d say it works fine, but only for simple cases, because it breaks the dependency management in Maven, especially with transitive dependencies. It also breaks IDE integration because sources and javadoc won’t match.

- https://projects.eclipse.org/projects/technology.transformer[Eclipse Transformer]

The Eclipse Transformer is also a generic tool, but it’s been heavily developed for the `javax` to `jakarta` namespace change. It operates on resources such as:

Simple resources:

- Java class files

- OSGi feature manifest files

- Properties files

- Service loader configuration files

- Text files (of several types: java source, XML, TLD, HTML, and JSP)

Container resources:

- Directories

- Java archives (JAR, WAR, RAR, and EAR files)

- ZIP archives

It can be configured using Java Properties files to properly convert Java Modules, classes, and test resources. This is the approach we used for Apache TomEE 9.0.0-M7 when we first tried to convert to `jakarta`. It had limitations, so we then had to find tricks to solve issues. As it was converting the final distribution and not the individual artifacts, it was impossible for users to use Arquillian or the Maven plugin, because they were not converted.

- https://github.com/apache/tomcat-jakartaee-migration[Apache Tomcat Migration tool]

This tool can operate on a directory or an archive (zip, ear, jar, war). It can quite easily migrate an application based on the set of specifications supported in Tomcat and a few more. It has the notion of profiles so that you can ask it to convert more.

You can run it using the ANT task (within Maven or not), and there is also a command line interface to run it easily.

Deploy time

When using an application server, it is sometimes possible to step into the deployment process and convert the binaries prior to their deployment.

- https://github.com/apache/tomcat-jakartaee-migration[Apache Tomcat/TomEE migration tool]

Mind that by default, the tool converts only what’s supported by Apache Tomcat and a couple of other APIs. It does not convert all specifications supported in TomEE, like JAX-RS for example. And Tomcat does not yet provide any way to configure it.

Runtime

We haven’t seen any working solution in this area. Of course, we could imagine a JavaAgent approach that converts the bytecode right when it gets loaded by the JVM. The startup time would be seriously impacted, and the conversion would have to be done every time the JVM restarts or loads a class in a classloader. Remember that a class can be loaded multiple times in different classloaders.
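To show where such a hook would sit, here is a detection-only sketch built on the standard `java.lang.instrument` API. The class name `NamespaceAgent` and the counter are hypothetical, and the sketch deliberately does not rewrite anything: it only counts the classes that mention `javax/servlet/`, which is where a real agent would have to do the conversion work for every class load.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.nio.charset.StandardCharsets;
import java.security.ProtectionDomain;
import java.util.concurrent.atomic.AtomicInteger;

public class NamespaceAgent implements ClassFileTransformer {

    // Number of loaded classes that reference the old namespace (hypothetical metric).
    public static final AtomicInteger CANDIDATES = new AtomicInteger();

    // Invoked by the JVM when started with -javaagent:<jar>; the jar manifest
    // needs a Premain-Class entry pointing at this class.
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(new NamespaceAgent());
    }

    @Override
    public byte[] transform(ClassLoader loader, String className, Class<?> classBeingRedefined,
                            ProtectionDomain protectionDomain, byte[] classfileBuffer) {
        // Class names are stored in the constant pool in internal form, e.g. javax/servlet/Servlet.
        String raw = new String(classfileBuffer, StandardCharsets.ISO_8859_1);
        if (raw.contains("javax/servlet/")) {
            CANDIDATES.incrementAndGet();
            // A real agent would rewrite the constant pool here (for example with ASM)
            // and return the new bytes. This sketch only detects.
        }
        return null; // null tells the JVM to keep the class bytes unchanged
    }
}
```

The transformer is called once per class and per classloader, so the scanning and rewriting cost would be paid again on every JVM start.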

Source code enhancement approach

This may sound like the most impactful approach, but it is probably also the safest one. We also strongly believe that embracing the change sooner rather than later is preferable. As mentioned, this is one of the biggest breaking changes in Java of the last 20 years. Since Java EE moved to Eclipse to become Jakarta, we have noticed a change in the release cadence. Releases are now more frequent and more changes are going to happen. Killing the technical debt as soon as possible is probably best when the change is so impactful.

There are a couple of tools we tried. There are probably more in the ecosystem, and also some in-house developments.

[IMPORTANT]

This is usually a one-shot operation. It won’t be perfect, and no doubt it will require adjustments, because there is no perfect tool that can handle all cases.

IntelliJ IDEA

IntelliJ IDEA added a refactoring capability to its IDE in order to convert sources to the new `jakarta` namespace. I haven’t tested it myself, but it may help to do the first big step when you don’t really master the scripting approach below.

Scripting approach

For simple cases, and we used that approach to do most of the conversion in TomEE, you can create your own simple tool to convert sources. For instance, SmallRye does that with their MicroProfile implementations. Here is an example https://github.com/smallrye/smallrye-config/blob/main/to-jakarta.sh

Using basic Linux commands, it converts from the `javax` to the `jakarta` namespace and then the result is pushed to a dedicated branch. The benefit is that they have 2 source trees with different artifacts, so the dependency management isn’t broken.

One source tree is the reference and they add to the script the necessary commands to convert additional things on demand.
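The same idea can be sketched in Java instead of shell commands. This is not the SmallRye script: the class `ToJakarta` and the `MOVED` list are hypothetical, and the list is only an illustrative subset to extend on demand. The sketch replaces a fixed set of package prefixes and leaves `javax` packages that belong to Java SE untouched.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class ToJakarta {

    // Illustrative subset of packages that moved to jakarta.*; extend on demand.
    // Java SE packages (javax.sql, javax.naming, javax.crypto, javax.transaction.xa...)
    // are deliberately absent because they keep the javax prefix.
    static final List<String> MOVED = List.of(
            "javax.servlet", "javax.ws.rs", "javax.persistence", "javax.inject",
            "javax.enterprise", "javax.json", "javax.validation");

    // Replaces each listed prefix, in imports as well as in Strings.
    public static String convert(String text) {
        String result = text;
        for (String pkg : MOVED) {
            String target = "jakarta" + pkg.substring("javax".length());
            result = result.replace(pkg + ".", target + ".");
        }
        return result;
    }

    public static void main(String[] args) throws IOException {
        Path root = Paths.get(args.length > 0 ? args[0] : ".");
        List<Path> files;
        try (Stream<Path> walk = Files.walk(root)) {
            files = walk.filter(Files::isRegularFile)
                    .filter(p -> p.toString().endsWith(".java"))
                    .collect(Collectors.toList());
        }
        for (Path file : files) {
            String original = new String(Files.readAllBytes(file), StandardCharsets.UTF_8);
            String converted = convert(original);
            if (!converted.equals(original)) {
                // Rewrites the file in place; run it on a dedicated branch.
                Files.write(file, converted.getBytes(StandardCharsets.UTF_8));
                System.out.println("converted " + file);
            }
        }
    }
}
```

Using an explicit list rather than a blanket `javax.` replacement keeps imports such as `javax.sql.DataSource` intact, at the price of maintaining the list.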

- https://projects.eclipse.org/projects/technology.transformer[Eclipse Transformer]

Because the Eclipse Transformer can operate on text files, it can be easily used to migrate the sources from `javax` to `jakarta` namespace.

Producing converted artifacts for applications for consumption

Whether you are working on Open Source or not, someone will consume your artifacts. If you are using Maven for example, you may ask yourself which option is best, especially if you maintain the 2 branches `javax` and `jakarta`.

[NOTE]

It does not matter if you use the bytecode or the source code approach.

Updating version or artifactId

This is probably the most practical solution. Some projects, like Arquillian for example, decided to use a different artifact name (-jakarta suffix). But the artifact is the same and solves the same problem, so why bring a technical concern into the name? I’m more in favor of using the version to mark the namespace change. It is in some ways a major API change that I’d rather emphasize using a major version update.

[IMPORTANT]

Mind that this only works if both `javax` and `jakarta` APIs are backward compatible. Otherwise, it won’t work.

Using Maven classifiers

This is not an option we would recommend. Unfortunately some of our dependencies use this approach and it has many drawbacks. It’s fine for a quick test, but as I mentioned previously, it badly impacts how Maven works. If you pull a transformed artifact, you may get a transitive dependency that is not transformed. This is the case for multi-module projects as well.

Another painful side effect is that javadoc and sources are still linked to the original artifact, so you will have a hard time debugging in the IDE.

Conclusion

We tried the bytecode approach ourselves in TomEE with the hope we could avoid maintaining 2 source trees, one for the `javax` and the other one for the `jakarta` namespace. Unfortunately, as we have seen before, the risk is too high and there are too many edge cases not covered. Apache TomEE runs about 60k tests (including TCK) and our confidence wasn’t good enough. Even though the approach has some benefits and can work for simple use cases, like converting a small utility tool, in our opinion it is not a fit for real applications.

The post Moving from javax to jakarta namespace appeared first on Tomitribe.

October 02, 2023

Choosing Connector in Jersey

by Jan at October 02, 2023 01:49 PM

September 27, 2023

Navigating the Shift From Drupal 7 to Drupal 9/10 at the Eclipse Foundation

September 27, 2023 02:30 PM

We’re currently in the middle of a substantial transition as we are migrating mission-critical websites from Drupal 7 to Drupal 9, with our sights set on Drupal 10. This shift has been motivated by several factors, including the announcement of Drupal 7 end-of-life which is now scheduled for January 5, 2025, and our goal to reduce technical debt that we accrued over the last decade.

To provide some context, we’re migrating a total of six key websites:

- projects.eclipse.org: The Eclipse Project Management Infrastructure (PMI) consolidates project management activities into a single consistent location and experience.

- accounts.eclipse.org: The Eclipse Account website is where our users go to manage their profiles and sign essential agreements, like the Eclipse Contributor Agreement (ECA) and the Eclipse Individual Committer Agreement (ICA).

- blogs.eclipse.org: Our official blogging platform for Foundation staff.

- newsroom.eclipse.org: The Eclipse Newsroom is our content management system for news, events, newsletters, and valuable resources like case studies, market reports, and whitepapers.

- marketplace.eclipse.org: The Eclipse Marketplace empowers users to discover solutions that enhance their Eclipse IDE.

- eclipse.org/downloads/packages: The Eclipse Packaging website is our platform for managing the publication of download links for the Eclipse Installer and Eclipse IDE Packages on our websites.

The Progress So Far

We’ve made substantial progress this year with our migration efforts. The team successfully completed the migration of Eclipse Blogs and Eclipse Newsroom. We are also in the final stages of development with the Eclipse Marketplace, which is currently scheduled for a production release on October 25, 2023. Next year, we’ll focus our attention on completing the migration of our more substantial sites, such as Eclipse PMI, Eclipse Accounts, and Eclipse Packaging.

More Than a Simple Migration: Decoupling Drupal APIs With Quarkus

This initiative isn’t just about moving from one version of Drupal to another. Simultaneously, we’re undertaking the task of decoupling essential APIs from Drupal in the hope that future migrations or upgrades won’t impact as many core services at the same time. For this purpose, we’ve chosen Quarkus as our preferred platform. In Q3 2023, the team successfully migrated the GitHub ECA Validation Service and the Open-VSX Publisher Agreement Service from Drupal to Quarkus. In Q4 2023, we’re planning to continue down that path and deploy a Quarkus implementation of several critical APIs such as:

- Account Profile API: This API offers user information, covering ECA status and profile details like bios.

- User Deletion API: This API monitors user deletion requests ensuring the right to be forgotten.

- Committer Paperwork API: This API keeps tabs on the status of ongoing committer paperwork records.

- Eclipse USS: The Eclipse User Storage Service (USS) allows Eclipse projects to store user-specific project information on our servers.

Conclusion: A Forward-Looking Transition

Our migration journey from Drupal 7 to Drupal 9, with plans for Drupal 10, represents our commitment to providing a secure, efficient, and user-friendly online experience for our community. We are excited about the possibilities this migration will unlock for us, advancing us toward a more modern web stack.

Finally, I’d like to take this moment to highlight that this project is a monumental team effort, thanks to the exceptional contributions of Eric Poirier and Théodore Biadala, our Drupal developers; Martin Lowe and Zachary Sabourin, our Java developers implementing the API decoupling objective; and Frederic Gurr, whose support has been instrumental in deploying our new apps on the Eclipse Infrastructure.

September 20, 2023

New Jetty 12 Maven Coordinates

by Joakim Erdfelt at September 20, 2023 09:42 PM

Now that Jetty 12.0.1 is released to Maven Central, we’ve started to get a few questions about where some artifacts are, or when we intend to release them (as folks cannot find them).

Things have changed with Jetty, starting with the 12.0.0 release.

First, our historical versioning of <servlet_support>.<major>.<minor> is no longer being used.

With Jetty 12, we are now using a more traditional <major>.<minor>.<patch> versioning scheme for the first time.

Also new in Jetty 12 is that the Servlet layer has been separated away from the Jetty Core layer.

The Servlet layer has been moved to the new Environments concept introduced with Jetty 12.

| Environment | Jakarta EE | Servlet | Jakarta Namespace | Jetty GroupID |

| ee8 | EE8 | 4 | javax.servlet | org.eclipse.jetty.ee8 |

| ee9 | EE9 | 5 | jakarta.servlet | org.eclipse.jetty.ee9 |